Python's advanced capabilities are revealed! Explore the complex yet intriguing concepts of concurrency, multithreading, and parallelism, and learn how to supercharge your Python applications.

The success of a software product often hinges on its efficiency and speed, and Python offers robust capabilities in this regard. It is a high-level programming language that has been rapidly gaining popularity among developers, in part because of its support for concurrency, multithreading, and parallelism.

We delve into these complex topics, showcasing how our Python developers at Bluebird have mastered these aspects of Python programming to develop robust, responsive, and efficient applications.

Concurrency vs. Multithreading in Python: Demystifying the Concepts

When it comes to Python, or programming in general, concurrency and multithreading are fundamental yet often misunderstood concepts.

Concurrency refers to managing multiple tasks whose execution periods overlap. It's like a single chef preparing several dishes in one session: chopping vegetables for a salad while waiting for the pasta water to boil. Concurrency doesn't necessarily mean the tasks run at the same instant; it means switching between them so that they all make progress.

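Multithreading is a particular form of concurrency in which a single process runs two or more threads of execution. Each thread is scheduled independently, so tasks can run in overlapping time periods. In the standard CPython build, the global interpreter lock (GIL) lets only one thread execute Python bytecode at a time, so threads help most when tasks spend their time waiting on I/O rather than computing.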
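To make the idea of overlapping execution concrete, here is a minimal sketch using the standard threading module. The prepare() and cook_concurrently() helpers, the dish names, and the durations are all hypothetical, and time.sleep() stands in for any task that mostly waits.

```python
import threading
import time


def prepare(dish, seconds, finished, lock):
    """Hypothetical task that mostly waits, like pasta water coming to a boil."""
    time.sleep(seconds)            # while this thread sleeps, the others can run
    with lock:                     # guard the shared list against simultaneous writes
        finished.append(dish)


def cook_concurrently(dishes):
    """Run one thread per (dish, seconds) pair; return finish order and elapsed time."""
    finished, lock = [], threading.Lock()
    threads = [
        threading.Thread(target=prepare, args=(dish, seconds, finished, lock))
        for dish, seconds in dishes
    ]
    start = time.perf_counter()
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()              # wait for every thread to finish
    return finished, time.perf_counter() - start


if __name__ == "__main__":
    done, elapsed = cook_concurrently([("pasta", 0.3), ("salad", 0.1), ("sauce", 0.2)])
    print(done, f"{elapsed:.2f}s")
```

Run one after another, the three waits would add up to about 0.6 seconds. Because the threads overlap, the total should land close to the longest single wait instead.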

Parallelism vs. Concurrency: Unveiling the Difference

Although 'concurrency' and 'parallelism' might sound synonymous, they differ significantly in how they execute and in what they are meant to achieve.

Concurrency, as we explained, focuses on managing multiple tasks in overlapping periods, irrespective of whether the tasks are actually running at the same instant. It's more about structure and organization.

In contrast, parallelism is about simultaneously executing multiple tasks. It is more about improving performance. Imagine the same kitchen scenario, but now with four chefs, each cooking their own pasta dish. They are all cooking independently and simultaneously. That's parallelism.

In simple terms, concurrency is about dealing with multiple things at once, while parallelism is about doing multiple things at once. Both concepts play crucial roles in enhancing the responsiveness and performance of Python applications, particularly in tasks that can be broken down and executed independently.

Digging Deeper into Parallelism

Parallelism, another crucial concept that Python developers should grasp, involves breaking down a task into smaller sub-tasks that can be processed simultaneously. These sub-tasks typically run on separate CPU cores. This approach proves especially beneficial for CPU-bound tasks requiring heavy computations.

By distributing tasks across multiple cores, the execution time of a process can be significantly reduced, making programs faster and more efficient. Python offers the multiprocessing module as a potent tool for achieving parallelism.
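As a rough illustration of splitting a CPU-bound job into sub-tasks, the sketch below counts prime numbers by handing separate chunks of a range to separate processes. The prime-counting workload and the helper names (count_primes, split_range, count_primes_parallel) are assumptions chosen for the example, not part of any library.

```python
import math
from multiprocessing import Pool


def count_primes(bounds):
    """CPU-bound sub-task: count the primes in the half-open range [start, stop)."""
    start, stop = bounds
    count = 0
    for n in range(max(start, 2), stop):
        # n is prime if no d in 2..sqrt(n) divides it evenly
        if all(n % d for d in range(2, math.isqrt(n) + 1)):
            count += 1
    return count


def split_range(limit, parts):
    """Cut [0, limit) into contiguous chunks of near-equal size."""
    step = max(1, math.ceil(limit / parts))
    return [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]


def count_primes_parallel(limit, workers=4):
    """Give each chunk to a worker process and add up the partial counts."""
    with Pool(processes=workers) as pool:
        return sum(pool.map(count_primes, split_range(limit, workers)))


if __name__ == "__main__":
    # The guard keeps child processes from re-running this block on import.
    print(count_primes_parallel(100_000))
```

Equal-width chunks are the simplest split, but not the fairest one: larger numbers take longer to test, so the last chunk does the most work. Using more, smaller chunks than workers is one way to even that out.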

Python Mechanisms for Concurrency, Multithreading, and Parallelism

Python Threading

A Python thread is a unit of work in which a function executes independently of the rest of the program. Results are typically collected once all threads have run to completion.

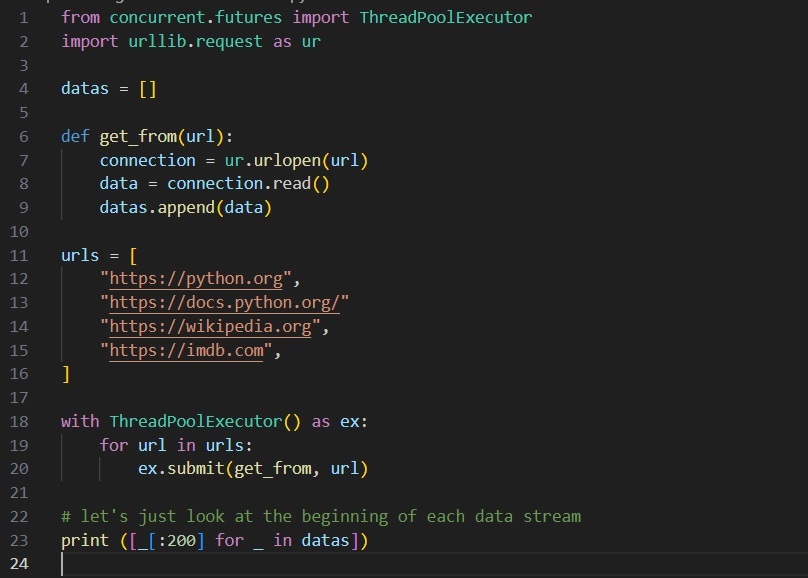

Consider the following example where Python handles threading to read data from multiple URLs at once:
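A minimal sketch of such a script, using only the standard library. The read_url() and read_all() helpers are hypothetical names, and the `data:` URLs are an assumption made so the sketch runs offline; real http(s) addresses can be swapped in to exercise actual network waits.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen

# Hypothetical inputs: `data:` URLs carry their own content, so no network is needed.
URLS = [
    "data:text/plain,first%20page",
    "data:text/plain,second%20page",
    "data:text/plain,third%20page",
]


def read_url(url):
    """Fetch one URL and return its body as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")


def read_all(urls, max_workers=8):
    """Fetch every URL on a pool of worker threads, keeping the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # map() hands each URL to a free thread and yields results in order
        return list(executor.map(read_url, urls))


if __name__ == "__main__":
    for url, body in zip(URLS, read_all(URLS)):
        print(f"{url!r}: {len(body)} characters")
```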

Here, ThreadPoolExecutor manages a pool of worker threads. Many URLs can be submitted this way without a significant slowdown, because each thread yields to the others whenever it is only waiting for a remote server to respond.

Python Coroutines and Async

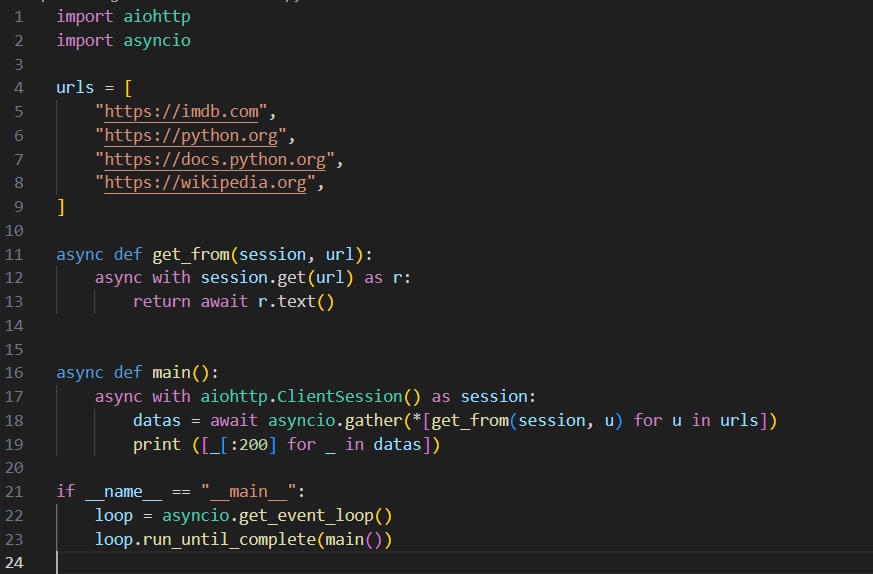

Coroutines, written with async and await, offer a different approach to executing functions concurrently in Python. Because they are scheduled by an event loop inside the Python runtime rather than by the operating system, coroutines require far less overhead than threads. Here is an example of async handling a network request in Python:
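A minimal sketch, with one loud assumption: get_from() does not contact a real server. It awaits asyncio.sleep() as a stand-in for the time a remote host would take to respond, and the URLs are hypothetical. In practice an async-friendly HTTP client would do the actual request.

```python
import asyncio


async def get_from(url, delay=0.1):
    """Stand-in for a network request; the sleep plays the server's response time."""
    await asyncio.sleep(delay)     # hands control to other coroutines while "waiting"
    return f"response from {url}"


async def main(urls):
    """Start one coroutine per URL and collect the results in input order."""
    return await asyncio.gather(*(get_from(url) for url in urls))


if __name__ == "__main__":
    # Hypothetical addresses, used only as labels by the simulated request.
    urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"]
    for line in asyncio.run(main(urls)):
        print(line)
```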

Coroutines, like get_from() in the example, can run side by side with other coroutines. The function asyncio.gather() launches several coroutines, waits until they all run to completion, and then returns their aggregated results as a list.

Python Multiprocessing

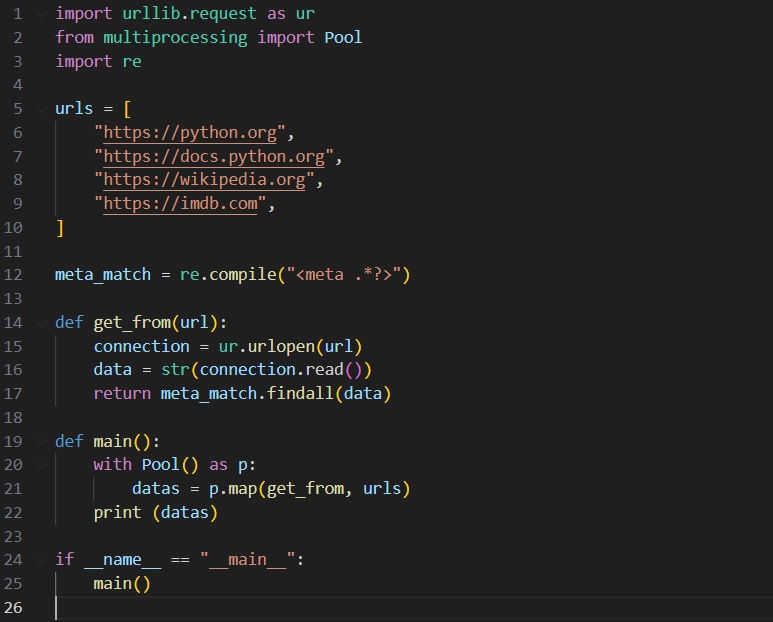

Multiprocessing allows the parallel execution of CPU-intensive tasks by launching multiple, independent copies of the Python runtime. Here's an example of a web-reading script that uses multiprocessing:
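A minimal sketch of what such a script might look like. The count_words() and count_all() helpers are hypothetical, and the `data:` URLs are an assumption that lets the sketch run without network access; replace them with real addresses as needed.

```python
from multiprocessing import Pool
from urllib.request import urlopen

# Hypothetical inputs: `data:` URLs carry their own content, so no network is needed.
URLS = [
    "data:text/plain,one%20two%20three",
    "data:text/plain,four%20five",
    "data:text/plain,six",
]


def count_words(url):
    """Worker: read one URL and count the whitespace-separated words in its body."""
    with urlopen(url, timeout=10) as response:
        return len(response.read().decode("utf-8").split())


def count_all(urls, processes=3):
    """Distribute the URLs across a pool of worker processes."""
    with Pool(processes=processes) as pool:
        return pool.map(count_words, urls)


if __name__ == "__main__":
    # The guard keeps child processes from re-running this block on import.
    print(count_all(URLS))
```

The worker is defined at module level so that it can be sent to the child processes, and the `if __name__ == "__main__":` guard matters on platforms that start workers by spawning a fresh interpreter.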

In the above example, Pool() is an object representing a reusable group of processes. Its .map() method takes a function to run in those processes and an iterable whose items are distributed among them.

Deciding the Right Concurrency Model for Your Python Application

When developing applications in Python, it is crucial to choose the right concurrency model that best fits the nature of the tasks at hand.

For long-running, CPU-intensive operations, multiprocessing is typically the best choice. Each process runs its own instance of the Python runtime, so CPU-bound work can use every available core instead of being confined to a single interpreter that blocks while it computes.

For I/O-bound operations, which spend most of their time waiting on an external resource such as a network call, either threading or coroutines can be used. While the efficiency difference between the two is insignificant when dealing with a few tasks at once, coroutines prove to be more efficient when dealing with thousands of tasks. This is because it's easier for the runtime to manage large numbers of coroutines than large numbers of threads.
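To give a feel for that scale, the sketch below starts thousands of coroutines at once on a single thread. The wait_on_resource() and run_many() names are hypothetical, and asyncio.sleep() is an assumed stand-in for waiting on an external resource.

```python
import asyncio
import time


async def wait_on_resource(task_id, delay=0.05):
    """Simulated I/O-bound task: wait, then report which task finished."""
    await asyncio.sleep(delay)
    return task_id


async def run_many(count):
    """Launch `count` coroutines together and gather their results in order."""
    return await asyncio.gather(*(wait_on_resource(i) for i in range(count)))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(run_many(10_000))
    print(f"{len(results)} tasks finished in {time.perf_counter() - start:.2f}s")
```

Starting the same number of operating-system threads would cost noticeably more memory and scheduling work, which is the gap the paragraph above describes.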

It's also worth noting that coroutines work best when using libraries that are async-friendly, such as aiohttp.

The Expertise of Bluebird Python Developers

Our Python developers at Bluebird are adept at handling these complex aspects of Python programming. They skillfully leverage Python's robust tools and libraries to manage concurrency, multithreading, and parallelism effectively. Their mastery of these topics ensures that the applications they develop are not just robust and responsive but also efficient.