Python interview questions and answers: Discover Bluebird's comprehensive guide for acing your next Python developer job interview.


We know how important it is to be well-prepared for interviews, so this article is meant to be your go-to guide for succeeding in Python interviews.

It can be hard to find your way around Python's vast landscape, but our carefully chosen collection of Python interview questions and answers will make sure you are ready to answer any Python-related question. With a wide range of topics covered, such as Python basics, data structures, object-oriented programming, libraries, frameworks, and soft skills, you can feel sure that you will do well during the interview process.

This set of Python interview questions and answers will help employers evaluate a candidate's technical skills, ability to solve problems, and ability to work well with others. By asking these questions during the interview process, you can find the best people for your team and make sure that your organization's Python development goes well.

Dive into our extensive list of Python interview questions and answers to get the confidence you need to ace your next Python job interview or find the best person for your team.

Happy interviewing!

Python Fundamentals

1. How are mutable and immutable data types different in Python?

Mutable data types can be changed after they are created, while immutable data types cannot.

Mutable data types include lists, dictionaries, and sets. Immutable data types include strings, tuples, and frozensets.

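Because immutable objects cannot be changed, they can be shared safely without defensive copying, and they are hashable (provided their contents are), so they can be used as dictionary keys and set members. They can also be slightly more compact in memory: in CPython, for example, a tuple takes less space than a list holding the same items.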

2. Explain the difference between a list and a tuple.

A list is a mutable, ordered collection of items, whereas a tuple is an immutable, ordered collection of items.

Lists are defined using square brackets [ ], while tuples use parentheses ( ).

Lists are more suitable for cases where the sequence needs to be modified, whereas tuples are useful for creating fixed, unchanging sequences.

3. What is the use of the else clause in a Python loop?

The 'else' clause in a Python loop is executed when the loop finishes iterating through the items (in a 'for' loop) or when the condition becomes false (in a 'while' loop). The 'else' clause is not executed if the loop is terminated by a 'break' statement.
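As a small illustration of this behavior, the sketch below searches a list and uses the loop's else clause for the "nothing found" case. The function name find_first_negative and the sample data are hypothetical:

```python
def find_first_negative(numbers):
    """Return a message describing the first negative number, if any."""
    for n in numbers:
        if n < 0:
            result = f"found {n}"
            break  # leaving via break skips the else clause
    else:
        # Runs only when the loop finished without hitting break.
        result = "no negatives"
    return result


print(find_first_negative([3, -1, 4]))  # found -1
print(find_first_negative([3, 1, 4]))   # no negatives
```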

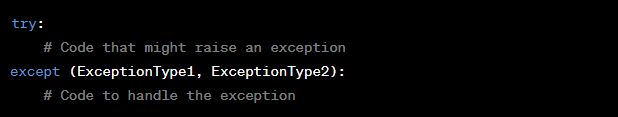

4. Describe how to handle exceptions in Python using try-except blocks.

To handle exceptions in Python, wrap the code that may raise an exception inside a try block. Then, use an except block to catch the exception and specify what actions to take when the exception occurs. You can catch multiple exception types by specifying them in a tuple. For example:
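A minimal sketch of that pattern follows. The function name safe_divide is hypothetical, and the else and finally clauses are optional extras that the description above does not cover:

```python
def safe_divide(a, b):
    """Divide a by b, returning None when the inputs cannot be divided."""
    try:
        result = a / b
    except (ZeroDivisionError, TypeError) as exc:
        # One handler catches either exception type listed in the tuple.
        print(f"Could not divide: {exc}")
        return None
    else:
        # Runs only if the try block raised nothing.
        return result
    finally:
        # Runs in every case, which makes it a common place for cleanup.
        print("division attempted")


print(safe_divide(6, 3))  # 2.0
print(safe_divide(1, 0))  # None
```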

5. What is the difference between 'is' and '==' operators in Python?

The == operator compares the values of two objects to see if they are equal, whereas the is operator checks whether two names refer to the very same object (in CPython, the same memory address).

In other words, == checks for value equality, while is checks for object identity.
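The difference is easiest to see with two lists that hold the same values. This is a minimal sketch with made-up variable names:

```python
a = [1, 2, 3]
b = [1, 2, 3]   # a separate list with the same contents
c = a           # a second name for the same list object

print(a == b)   # True: equal values
print(a is b)   # False: two distinct objects
print(a is c)   # True: both names refer to one object
```

In practice, is should be reserved for identity checks such as `x is None`.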

6. Explain the use of the lambda keyword in Python.

The lambda keyword is used to create small, anonymous functions in Python. Lambda functions can have any number of arguments but only a single expression, which is returned as the result. They are often used as arguments for higher-order functions, such as map() or filter(). For example:
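The sketch below passes lambdas to map() and filter(), and also to sorted() as a key function (the sorted() use is an extra illustration, and the sample data is made up):

```python
numbers = [1, 2, 3, 4, 5, 6]

# map() applies the lambda to every element.
squares = list(map(lambda x: x * x, numbers))

# filter() keeps the elements for which the lambda returns a true value.
evens = list(filter(lambda x: x % 2 == 0, numbers))

# A lambda is also a common way to supply a sort key.
by_last_digit = sorted([21, 13, 40], key=lambda n: n % 10)

print(squares)        # [1, 4, 9, 16, 25, 36]
print(evens)          # [2, 4, 6]
print(by_last_digit)  # [40, 21, 13]
```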

7. How does Python's Global Interpreter Lock (GIL) impact multithreading?

The GIL is a mutex in CPython that prevents multiple native threads from executing Python bytecode at the same time within a single process. As a result, CPU-bound multithreaded programs running on the default CPython interpreter cannot fully utilize multiple CPU cores and may see little or no speedup from adding threads. The GIL matters far less for I/O-bound tasks, because it is released while a thread waits on I/O, and it does not limit the multiprocessing module, which creates separate processes that each have their own interpreter and GIL.

8. Describe the differences between shallow and deep copying in Python.

Shallow copying creates a new object, but the new object only contains references to the elements of the original object. In contrast, deep copying creates a new object and recursively copies all the elements and their nested objects. The copy module provides the copy() function for shallow copying and the deepcopy() function for deep copying.
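A minimal sketch of the difference, using a nested list as made-up sample data. Mutating an inner list shows which copy still shares it with the original:

```python
import copy

original = [[1, 2], [3, 4]]
shallow = copy.copy(original)      # new outer list, same inner lists
deep = copy.deepcopy(original)     # new outer list and new inner lists

original[0].append(99)             # mutate a nested list

print(shallow[0])  # [1, 2, 99]  (shared with original)
print(deep[0])     # [1, 2]      (independent copy)
```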

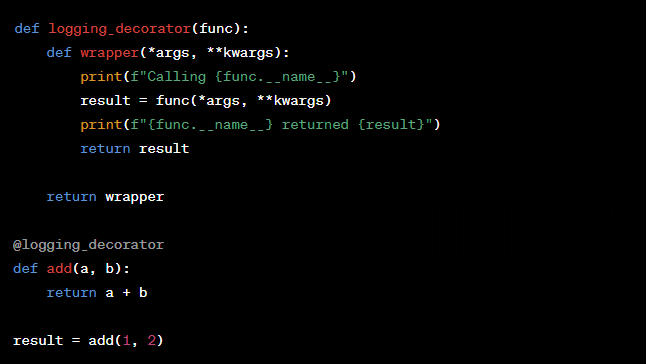

9. What are decorators, and how are they used in Python?

Decorators are a powerful feature in Python that allows you to modify or extend the behavior of functions or methods without changing their code. They are a form of metaprogramming and can be used for a variety of purposes, such as code instrumentation, access control, logging, or memoization.

A decorator is essentially a higher-order function that takes a function (or method) as input and returns a new function (or method) with the desired modifications. In Python, decorators are applied to functions or methods using the '@' syntax.
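As an illustration, here is a minimal sketch of a logging-style decorator. The names log_calls and add are hypothetical, and storing the call history on the wrapper is just one simple design choice:

```python
import functools


def log_calls(func):
    """Decorator that records each call to func before running it."""
    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        wrapper.calls.append((args, kwargs))
        return func(*args, **kwargs)

    wrapper.calls = []  # call history, attached to the wrapper itself
    return wrapper


@log_calls  # equivalent to: add = log_calls(add)
def add(a, b):
    return a + b


print(add(2, 3))   # 5
print(add.calls)   # [((2, 3), {})]
```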

10. How does Python manage memory for variables and objects?

Python uses a combination of reference counting and garbage collection to manage memory for variables and objects. The memory management process in Python can be divided into three main components: memory allocation, reference counting, and garbage collection.

Memory allocation: When you create a new variable or object in Python, the interpreter allocates memory for it. Python has a built-in memory manager that handles the allocation and deallocation of memory for objects.

Reference counting: Python uses reference counting to keep track of the number of references to an object. Each time an object is assigned to a variable or included in a data structure, its reference count is incremented. When the reference count drops to zero, the object is no longer accessible, and its memory can be reclaimed.

Garbage collection: While reference counting works well for most scenarios, it cannot handle circular references, where two or more objects reference each other, creating a reference cycle. In such cases, the reference count never drops to zero, so with reference counting alone the memory occupied by these objects would never be released, leading to a memory leak. To solve this issue, Python has a cyclic garbage collector that detects and collects unreachable objects involved in reference cycles. The collector is triggered automatically when allocation counts cross configurable thresholds, and it can also be run manually with gc.collect().

Data structures and algorithms

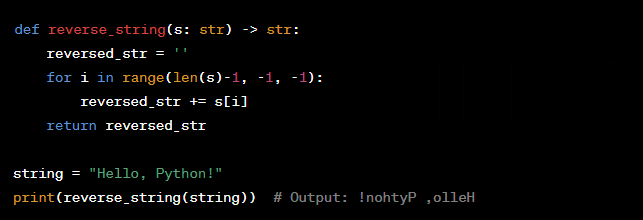

11. Implement a Python function to reverse a string without using built-in functions.
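One possible answer is sketched below. It assumes that "without built-in functions" rules out reversed() and slicing, and the function name reverse_string is hypothetical:

```python
def reverse_string(text):
    """Return text reversed, without reversed() or slice notation."""
    result = ""
    for ch in text:
        result = ch + result  # prepend, so earlier characters end up last
    return result


print(reverse_string("hello"))  # olleh
```

Repeated string concatenation makes this quadratic in the worst case, which is usually acceptable in an interview setting but worth mentioning.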

12. What is the time complexity of inserting an element at the beginning of a list in Python?

The time complexity of inserting an element at the beginning of a list in Python is O(n), where n is the number of elements in the list. This is because all existing elements need to be shifted to the right to make room for the new element.
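As a small illustration, the snippet below contrasts list.insert(0, x) with collections.deque.appendleft(), a standard-library alternative (not mentioned above) that adds to the front in O(1):

```python
from collections import deque

items = [2, 3, 4]
items.insert(0, 1)        # O(n): every existing element shifts right

queue = deque([2, 3, 4])
queue.appendleft(1)       # O(1): no shifting needed

print(items)        # [1, 2, 3, 4]
print(list(queue))  # [1, 2, 3, 4]
```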

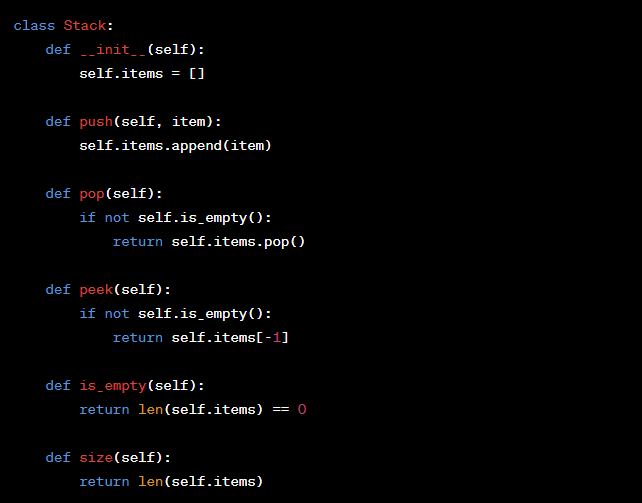

13. How do you implement a stack using Python lists?
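A minimal sketch of one common answer: wrap a list and use its end as the top of the stack, since append() and pop() at the end are O(1). The class and method names are hypothetical:

```python
class Stack:
    """Last-in, first-out stack backed by a Python list."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)      # top of the stack is the list's end

    def pop(self):
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def peek(self):
        if not self._items:
            raise IndexError("peek from empty stack")
        return self._items[-1]

    def is_empty(self):
        return not self._items

    def __len__(self):
        return len(self._items)
```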

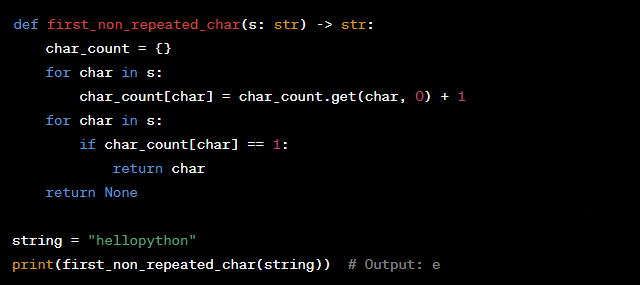

14. Write a Python function to find the first non-repeated character in a string.
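One way to answer is sketched below: count every character first, then scan the string in order for the first character whose count is 1. The function name is hypothetical, and returning None when no such character exists is an assumption:

```python
from collections import Counter


def first_non_repeated(text):
    """Return the first character that occurs exactly once, or None."""
    counts = Counter(text)            # one pass to count characters
    for ch in text:                   # second pass preserves original order
        if counts[ch] == 1:
            return ch
    return None


print(first_non_repeated("swiss"))  # w
```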

15. Explain the difference between a depth-first search and a breadth-first search in a graph.

Depth-first search (DFS) is a graph traversal algorithm that explores as far as possible along a branch before backtracking. It visits a vertex, then recursively visits its adjacent vertices that have not been visited before. DFS can be implemented using recursion or an explicit stack data structure. DFS is useful in solving problems that require traversing the graph along a specific path or finding connected components.

Breadth-first search (BFS) is another graph traversal algorithm that visits all the vertices at the same level before moving on to the next level. BFS uses a queue data structure to maintain the vertices to be visited. BFS is useful in solving problems that require finding the shortest path or determining the minimum number of steps to reach a target vertex from the source vertex.
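The two traversals can be sketched as follows, assuming the graph is stored as an adjacency-list dictionary. The sample graph and function names are made up, and a plain list tracks visited vertices to keep the visiting order visible (a set would give faster membership checks on large graphs):

```python
from collections import deque

graph = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D"],
    "D": [],
}


def dfs(graph, start, visited=None):
    """Depth-first: follow one branch to its end before backtracking."""
    if visited is None:
        visited = []
    visited.append(start)
    for neighbor in graph[start]:
        if neighbor not in visited:
            dfs(graph, neighbor, visited)
    return visited


def bfs(graph, start):
    """Breadth-first: visit all vertices at one level before the next."""
    visited = [start]
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in visited:
                visited.append(neighbor)
                queue.append(neighbor)
    return visited


print(dfs(graph, "A"))  # ['A', 'B', 'D', 'C']
print(bfs(graph, "A"))  # ['A', 'B', 'C', 'D']
```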

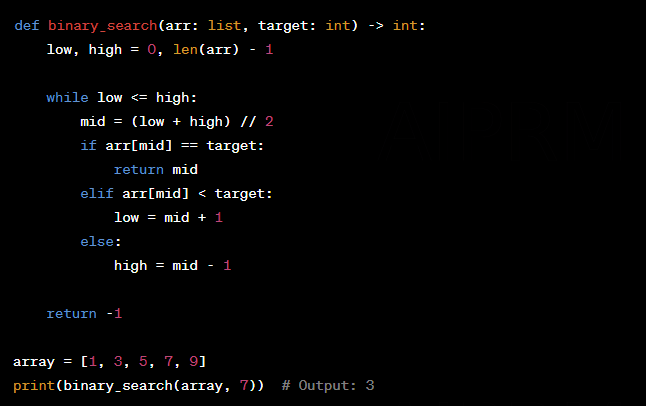

16. How do you implement a binary search algorithm in Python?
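A minimal iterative sketch, assuming the input list is already sorted in ascending order. The function name and the choice to return -1 for a missing target are assumptions:

```python
def binary_search(sorted_items, target):
    """Return the index of target in sorted_items, or -1 if absent."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1     # target can only be in the right half
        else:
            high = mid - 1    # target can only be in the left half
    return -1


print(binary_search([1, 3, 5, 7, 9], 7))  # 3
```

Each iteration halves the search range, so the running time is O(log n).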

17. Describe the process of a merge sort and its time complexity.

Merge sort is a divide-and-conquer algorithm that recursively splits the input array into two roughly equal halves, sorts each half, and then merges the sorted halves to produce the final sorted array. The merge step combines the two sorted halves by repeatedly comparing their front elements and taking the smaller one first. The time complexity of merge sort is O(n * log(n)), where n is the number of elements in the array, and this holds for the best, average, and worst cases, which makes its performance predictable on large datasets. Merge sort is also stable: elements that compare equal keep their original relative order, which matters for datasets with many duplicate keys. The usual array-based implementation needs O(n) extra space for merging.
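The split and merge steps can be sketched as below. This is a simple list-based version that returns a new list; the function names are hypothetical:

```python
def merge_sort(items):
    """Return a new sorted list using merge sort."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])
    return merge(left, right)


def merge(left, right):
    """Merge two sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        # Taking from the left on ties keeps equal elements in their
        # original order.
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two has leftovers
    merged.extend(right[j:])
    return merged


print(merge_sort([5, 2, 4, 1, 3]))  # [1, 2, 3, 4, 5]
```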

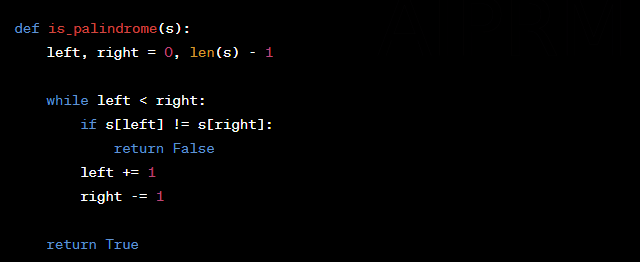

18. Implement a function to check if a given string is a palindrome.
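One possible answer is sketched below. It assumes the check should ignore case, spaces, and punctuation, which is a common interview variant; a stricter version would simply compare the raw string with its reverse. The function name is hypothetical:

```python
def is_palindrome(text):
    """Return True if text reads the same forwards and backwards,
    ignoring case and non-alphanumeric characters."""
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    return cleaned == cleaned[::-1]


print(is_palindrome("A man, a plan, a canal: Panama"))  # True
print(is_palindrome("hello"))                           # False
```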

19. Explain the concept of dynamic programming and provide an example.

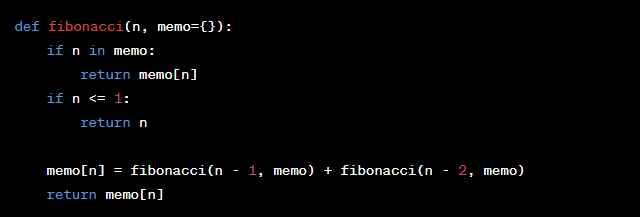

Dynamic programming is an optimization technique used in problem-solving and algorithm design. It solves problems by breaking them down into smaller overlapping subproblems and using their solutions to build the solution for the original problem. Dynamic programming typically involves memoization or tabulation to store the results of subproblems to avoid redundant calculations.

An example of dynamic programming is the Fibonacci sequence. The naive recursive approach has exponential time complexity. By using dynamic programming, we can reduce the time complexity to linear.
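Both styles can be sketched briefly. The first uses memoization through functools.lru_cache, the second uses bottom-up tabulation reduced to two running values; the function names are hypothetical:

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib_memo(n):
    """Top-down: cache each subproblem so it is computed only once."""
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


def fib_tab(n):
    """Bottom-up: build from the base cases, keeping only two values."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


print(fib_memo(10))  # 55
print(fib_tab(10))   # 55
```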

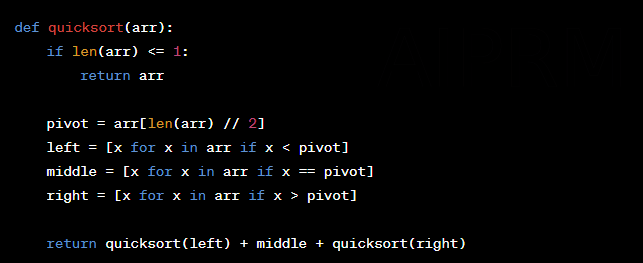

20. Describe the quicksort algorithm and discuss its average and worst-case time complexities.

Quicksort is a divide-and-conquer sorting algorithm that selects a 'pivot' element from the array and partitions the other elements into two groups, those less than the pivot and those greater than the pivot. The process is then recursively applied to the sub-arrays. The choice of the pivot element can significantly impact the algorithm's performance.

The average-case time complexity of quicksort is O(n * log(n)), where n is the number of elements in the array. However, the worst-case time complexity is O(n^2), which occurs when the pivot selection results in a highly unbalanced partitioning (e.g., when the input array is already sorted and the pivot is chosen as the first or last element). To mitigate this, randomized quicksort or the median-of-three method can be used to choose a better pivot.

Here's an implementation of quicksort in Python:
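A minimal sketch of such an implementation, assuming a list of mutually comparable items and favoring clarity over in-place efficiency:

```python
def quicksort(arr):
    """Return a sorted copy of arr using a middle-element pivot."""
    if len(arr) <= 1:
        return arr
    pivot = arr[len(arr) // 2]
    left = [x for x in arr if x < pivot]
    middle = [x for x in arr if x == pivot]
    right = [x for x in arr if x > pivot]
    return quicksort(left) + middle + quicksort(right)


print(quicksort([3, 6, 8, 10, 1, 2, 1]))  # [1, 1, 2, 3, 6, 8, 10]
```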

In this implementation, the pivot is chosen as the middle element of the array. The elements less than, equal to, and greater than the pivot are stored in separate lists, which are then recursively sorted using the quicksort function. The final sorted array is obtained by concatenating the sorted left, middle, and right lists.

Object-oriented programming (OOP)

21. Explain the four principles of object-oriented programming.

The four principles of object-oriented programming (OOP) are encapsulation, inheritance, polymorphism, and abstraction. These principles help organize code, promote reusability, and improve maintainability.

1. Encapsulation: This principle involves bundling related data and behaviors within a single unit, the class. It also includes restricting access to certain class members to ensure data integrity and prevent unintended modifications.

2. Inheritance: Inheritance allows a class to inherit properties and methods from a parent (base) class. This enables code reusability and helps create a more organized hierarchy of classes with shared and specialized functionality.

3. Polymorphism: Polymorphism allows a single interface to represent different types or behaviors. It enables the same function or method name to be used for different implementations, which makes it easier to extend or modify code without affecting existing functionality.

4. Abstraction: Abstraction focuses on simplifying complex systems by breaking them down into smaller, more manageable components. It involves hiding implementation details and exposing only essential features, making it easier to understand and work with the system.

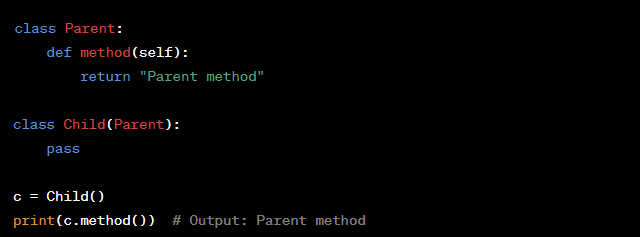

22. How do you achieve inheritance in Python?

Inheritance is achieved in Python by defining a new class that inherits properties and methods from an existing (parent) class. This is done by passing the parent class as an argument during the new class definition. For example:
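A minimal sketch, in which the method name greet and the PoliteChild class are hypothetical additions used to show inheriting and overriding side by side:

```python
class Parent:
    def greet(self):
        return "Hello from Parent"


class Child(Parent):          # Parent is passed in the class definition
    pass                      # inherits greet() unchanged


class PoliteChild(Parent):
    def greet(self):          # overrides and extends the parent's method
        return super().greet() + ", and from PoliteChild"


print(Child().greet())        # Hello from Parent
print(PoliteChild().greet())  # Hello from Parent, and from PoliteChild
```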

In this example, the Child class inherits the method from the Parent class. You can also override or extend the parent class's methods in the child class, as needed.

23. What is the difference between a class method, an instance method, and a static method in Python?

1. Instance methods: These are the most common type of methods in Python classes. They take the instance of the class (usually referred to as self) as their first argument and can access and modify instance attributes.

2. Class methods: These methods are bound to the class and not the instance. They take the class itself as their first argument (usually referred to as cls). Class methods can't access or modify instance-specific data but can modify class-level data shared across all instances.

3. Static methods: These methods don't take a special first argument (neither self nor cls). They can't access or modify instance or class data directly. Static methods act like regular functions but are defined within the class for organizational purposes.
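The three kinds of methods can be sketched in one class. Pizza and its members are hypothetical names chosen for illustration:

```python
class Pizza:
    base_price = 8  # class-level data shared by all instances

    def __init__(self, toppings):
        self.toppings = toppings

    def price(self):
        """Instance method: works with this particular pizza via self."""
        return self.base_price + len(self.toppings)

    @classmethod
    def margherita(cls):
        """Class method: receives the class, here as an alternative constructor."""
        return cls(["mozzarella", "basil"])

    @staticmethod
    def is_valid_topping(name):
        """Static method: a plain function grouped under the class."""
        return name.isalpha()


print(Pizza.margherita().price())       # 10
print(Pizza.is_valid_topping("basil"))  # True
```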

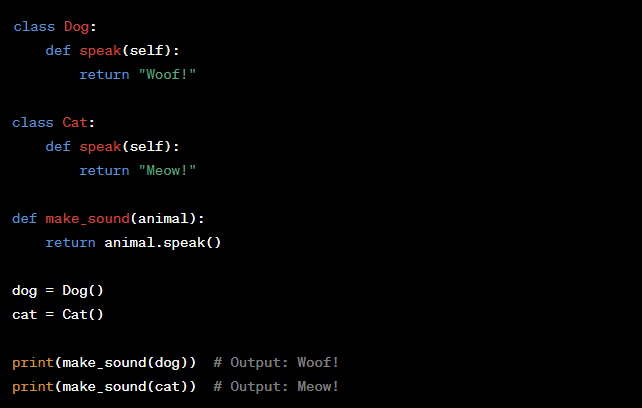

24. Explain the concept of polymorphism with a Python example.

Polymorphism allows different classes to share the same method or function name but have different implementations. For example:
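A minimal sketch with two unrelated classes that share a method name (the return strings are made up):

```python
class Dog:
    def speak(self):
        return "Woof!"


class Cat:
    def speak(self):
        return "Meow!"


def make_sound(animal):
    # Works with any object that provides a speak() method.
    return animal.speak()


print(make_sound(Dog()))  # Woof!
print(make_sound(Cat()))  # Meow!
```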

In this example, the Dog and Cat classes both have a speak method with different implementations. The make_sound function takes an instance of either class and calls its speak method, demonstrating polymorphism.

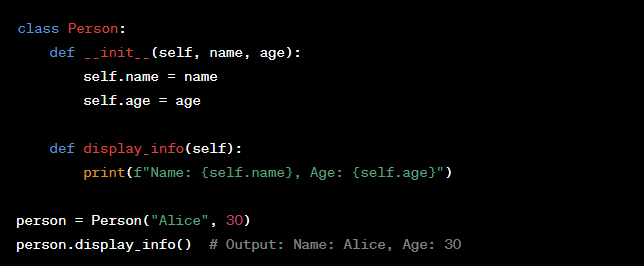

25. What is the purpose of the __init__ method in a Python class?

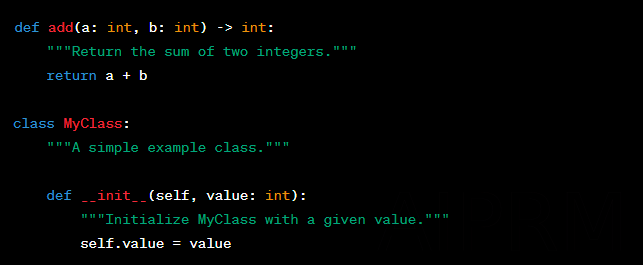

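The __init__ method is a special method in Python classes, commonly called the constructor (strictly speaking it is an initializer, because the object has already been created by __new__ by the time it runs). It is called automatically when an instance of the class is created. The __init__ method is typically used to initialize instance attributes and perform any setup required for the instance. For example: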
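A minimal sketch using a Person class with name and age attributes (the sample values are made up):

```python
class Person:
    def __init__(self, name, age):
        # Runs automatically when Person(...) is called.
        self.name = name
        self.age = age


alice = Person("Alice", 30)
print(alice.name, alice.age)  # Alice 30
```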

In this example, the __init__ method initializes the name and age attributes for the Person class.

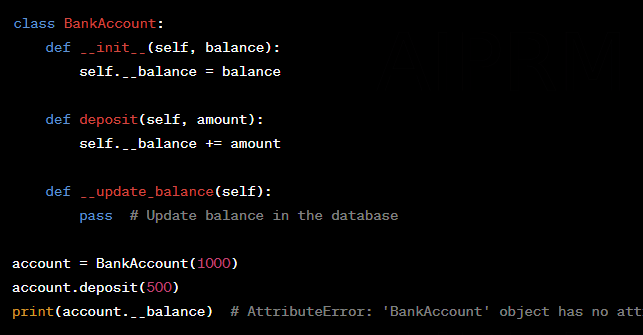

26. How do you implement encapsulation in Python?

Encapsulation is achieved in Python by using private and protected attributes and methods. By convention, a single underscore prefix (e.g., _attribute) indicates a protected attribute or method, while a double underscore prefix (e.g., __attribute) makes it private. Private attributes and methods are name-mangled, making it harder to accidentally access or modify them.
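A minimal sketch of these conventions, assuming a hypothetical BankAccount class with a private __balance attribute and a private __update_balance method (the deposit method and balance property are illustrative additions):

```python
class BankAccount:
    def __init__(self, opening_balance=0):
        # Name-mangled to _BankAccount__balance outside the class body.
        self.__balance = opening_balance

    def __update_balance(self, amount):
        self.__balance += amount

    def deposit(self, amount):
        """Public entry point that validates input before updating state."""
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.__update_balance(amount)

    @property
    def balance(self):
        """Read-only view of the balance."""
        return self.__balance


account = BankAccount(100)
account.deposit(50)
print(account.balance)                  # 150
print(hasattr(account, "__balance"))    # False
```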

In this example, the __balance attribute is private and the __update_balance method is private as well, enforcing encapsulation.

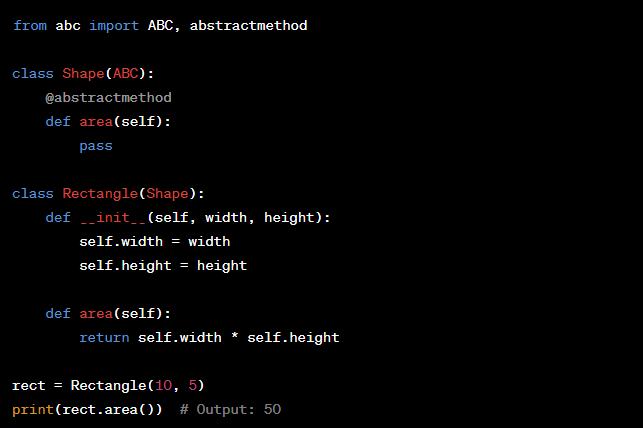

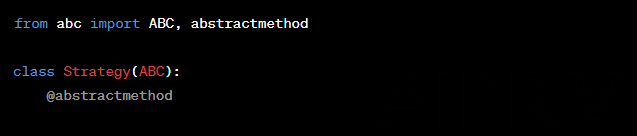

27. What are abstract classes and abstract methods in Python?

Abstract classes are classes that cannot be instantiated, and they are meant to be subclassed by other classes. Abstract methods are methods declared in an abstract class but don't have an implementation. Subclasses of the abstract class must provide an implementation for these methods.

In Python, you can define abstract classes and abstract methods using the abc module. To create an abstract class, inherit from ABC and use the @abstractmethod decorator for abstract methods:
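A minimal sketch, in which Shape and Rectangle are hypothetical class names:

```python
from abc import ABC, abstractmethod


class Shape(ABC):
    @abstractmethod
    def area(self):
        """Return the area of the shape."""


class Rectangle(Shape):
    def __init__(self, width, height):
        self.width = width
        self.height = height

    def area(self):               # concrete implementation is required
        return self.width * self.height


print(Rectangle(2, 3).area())     # 6
# Shape() would raise TypeError because area() is still abstract.
```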

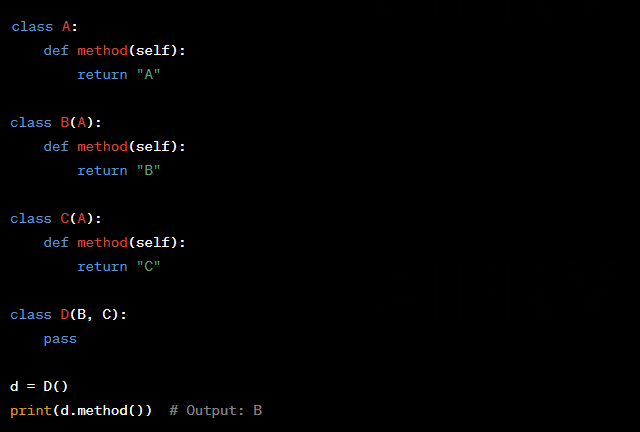

28. Describe the concept of multiple inheritance in Python and how it's resolved using the MRO algorithm.

Multiple inheritance is a feature in Python that allows a class to inherit from more than one parent class. The Method Resolution Order (MRO) algorithm determines the order in which the base classes are searched when looking for a method. Python uses the C3 Linearization algorithm to compute the MRO, which ensures a consistent order that respects the inheritance hierarchy and adheres to the monotonicity criterion.

For example:
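A minimal sketch of a diamond-shaped hierarchy, with hypothetical classes A, B, C, and D that define or inherit a method named method:

```python
class A:
    def method(self):
        return "A"


class B(A):
    def method(self):
        return "B"


class C(A):
    def method(self):
        return "C"


class D(B, C):    # B is listed first, so it is searched before C
    pass


print(D().method())                         # B
print([cls.__name__ for cls in D.__mro__])  # ['D', 'B', 'C', 'A', 'object']
```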

In this example, the MRO for class D is D → B → C → A (followed by the built-in object class), which determines that the method implementation from class B should be called.

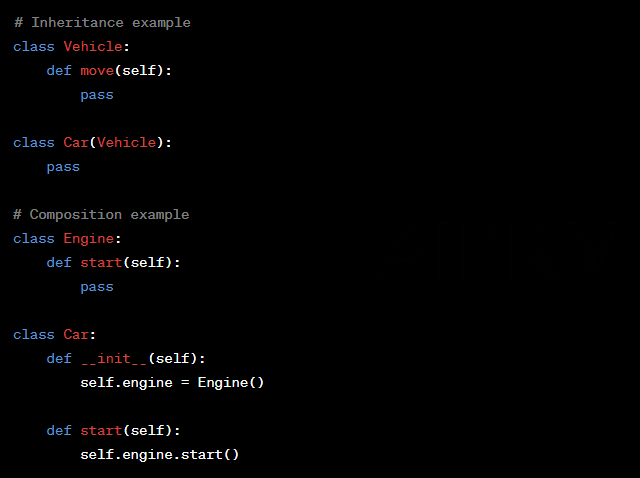

29. Explain the difference between composition and inheritance.

Inheritance is a relationship between classes where a class (subclass) inherits properties and methods from another class (parent or base class). Inheritance is a way to promote code reusability and create a hierarchy of related classes. Inheritance models the "is-a" relationship, meaning the subclass is a specialized version of the parent class.

Composition, on the other hand, is a relationship between objects where an object (the composite) contains one or more other objects (the components). Composition is a way to build complex objects by combining simpler ones, allowing you to create modular and more maintainable code. Composition models the "has-a" relationship, meaning the composite object contains or consists of other objects.

For example:
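A minimal sketch of both relationships. The two car variants are named CarByInheritance and CarByComposition only so that they can coexist in one block; the attributes and return strings are made up:

```python
# Inheritance: a car "is a" vehicle.
class Vehicle:
    def __init__(self, wheels):
        self.wheels = wheels

    def describe(self):
        return f"vehicle with {self.wheels} wheels"


class CarByInheritance(Vehicle):
    def __init__(self):
        super().__init__(wheels=4)


# Composition: a car "has an" engine.
class Engine:
    def start(self):
        return "engine started"


class CarByComposition:
    def __init__(self):
        self.engine = Engine()        # the component is held as an attribute

    def start(self):
        return self.engine.start()    # delegate to the component


print(CarByInheritance().describe())  # vehicle with 4 wheels
print(CarByComposition().start())     # engine started
```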

In the inheritance example, the Car class inherits from the Vehicle class. In the composition example, the Car class contains an instance of the Engine class as a component.

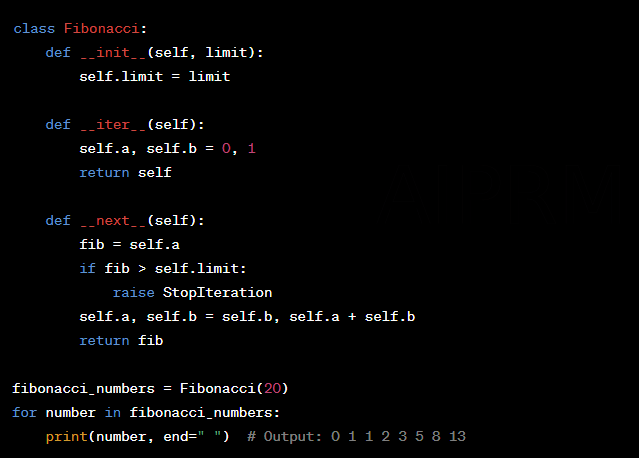

30. How do you create a custom iterator in Python?

To create a custom iterator in Python, you need to define an iterator class that implements the iterator protocol. The iterator protocol consists of two methods: __iter__() and __next__().

The __iter__() method should return the iterator object itself (usually self). The __next__() method should return the next value in the sequence or raise the StopIteration exception if there are no more items to return.

For example, let's create a custom iterator that generates Fibonacci numbers:
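A minimal sketch, assuming that limit means the largest value the iterator may yield:

```python
class Fibonacci:
    """Iterator over Fibonacci numbers that do not exceed limit."""

    def __init__(self, limit):
        self.limit = limit
        self.a, self.b = 0, 1

    def __iter__(self):
        return self                   # the object is its own iterator

    def __next__(self):
        if self.a > self.limit:
            raise StopIteration       # signals that the sequence is over
        value = self.a
        self.a, self.b = self.b, self.a + self.b
        return value


print(list(Fibonacci(20)))  # [0, 1, 1, 2, 3, 5, 8, 13]
```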

In this example, the Fibonacci class is a custom iterator that generates Fibonacci numbers up to a specified limit.

Libraries and frameworks

31. What are the main features of the NumPy library?

NumPy (Numerical Python) is a powerful library for numerical computing in Python. Its main features include:

1. Efficient n-dimensional array object (ndarray) for handling large datasets and performing vectorized operations.

2. Element-wise operations and broadcasting for performing mathematical operations on arrays without explicit loops.

3. A wide range of mathematical functions, including linear algebra, statistical functions, and Fourier analysis.

4. Built-in support for generating random numbers with various distributions.

5. Compatibility with various data formats and easy integration with other scientific libraries like SciPy and Pandas.

32. How do you handle missing data using the Pandas library?

Pandas provides several methods for handling missing data in DataFrame and Series objects:

1. Using the isna() or isnull() functions to detect missing values in the dataset.

2. Dropping missing data using the dropna() method, with options for specifying the axis (rows or columns), how many missing values to tolerate, and whether to drop based on missing values in a specific column.

3. Filling missing values using the fillna() method with a constant or per-column value, or propagating the nearest non-missing value forward or backward with the ffill() and bfill() methods.

4. Interpolating missing values using the interpolate() method, with options for specifying the interpolation method (linear, polynomial, spline, etc.) and the direction (forward, backward, or both).

33. Explain the role of the Django ORM in a web application.

The Django Object-Relational Mapping (ORM) is a key component of the Django web framework that allows developers to interact with the database using Python objects and classes, rather than writing raw SQL queries. The main roles of the Django ORM include:

1. Defining data models as Python classes, which can then be automatically translated into database tables.

2. Providing a high-level, Pythonic API for querying the database, creating, updating, and deleting records.

3. Handling database schema migrations, allowing developers to evolve the data model over time without manual SQL intervention.

4. Supporting complex queries and aggregations through the QuerySet API.

5. Enabling database independence by abstracting the underlying SQL dialects and supporting various database backends.

34. What is the purpose of the Flask microframework?

Flask is a lightweight and modular web framework for building web applications in Python. Its main purposes include:

1. Providing a simple and flexible structure for building web applications quickly and efficiently.

2. Supporting the development of small to medium-sized projects, where a full-fledged framework like Django might be too heavy or complex.

3. Offering a minimalistic core that can be easily extended with plug-ins and libraries to add functionality as needed, such as database integration, authentication, or form handling.

4. Simplifying the process of routing, handling HTTP requests and responses, and rendering templates.

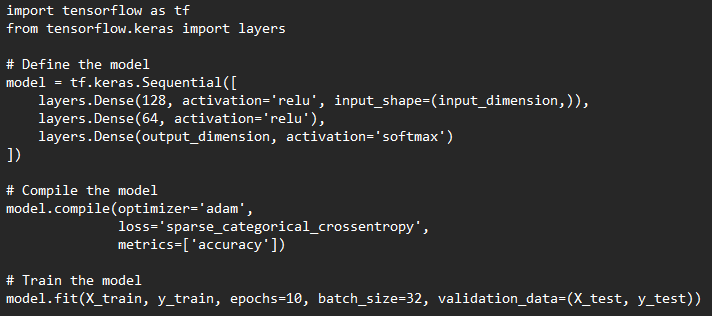

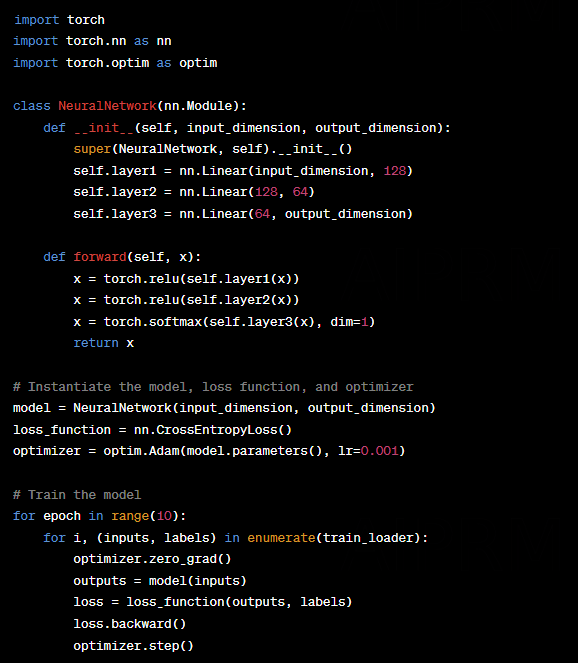

35. How do you create a basic neural network using TensorFlow or PyTorch?

Using TensorFlow:

Using PyTorch:

36. Describe the main components of the Scikit-learn library.

Scikit-learn is a popular Python library for machine learning and data analysis. Its main components include:

1. Data preprocessing: Scikit-learn provides various tools for data preprocessing, such as scaling, normalization, encoding, and imputation of missing values.

2. Feature extraction and selection: The library offers methods for extracting features from data, including text and images, and selecting the most relevant features for machine learning models.

3. Model training and evaluation: Scikit-learn includes a wide range of supervised and unsupervised learning algorithms, such as regression, classification, clustering, and dimensionality reduction algorithms. It also provides tools for model evaluation and selection, including cross-validation, scoring metrics, and grid search.

4. Model persistence and deployment: Scikit-learn allows you to save trained models using joblib or pickle for later use or deployment. It also integrates with other libraries and tools for deploying machine learning models, such as Flask or Django.

5. Pipelines: Scikit-learn's pipeline feature allows you to chain together multiple data preprocessing steps and a model into a single object, simplifying the process of building, evaluating, and deploying models.

6. Ensemble methods: The library supports various ensemble methods, such as bagging, boosting, and stacking, to combine the predictions of multiple base estimators and improve model performance.

7. Utilities: Scikit-learn provides additional utilities for data handling, such as dataset splitting, data transformation, and dataset loading functions.

37. Explain how the requests library is used for making HTTP requests in Python.

The requests library is a popular Python library for making HTTP requests. It simplifies the process of working with HTTP by providing easy-to-use methods for sending and receiving data over the web. Here's an example of how to use the requests library:

1. First, install the library using pip: pip install requests

2. Import the library in your Python script: import requests

3. To make a GET request, use the requests.get() function, for example response = requests.get(url), where url is a placeholder for the address you want to fetch.

4. To make a POST request, use the requests.post() function, for example response = requests.post(url, json=payload), where payload is a placeholder for the data to send.

5. You can also pass additional parameters, such as headers, query parameters, and authentication information, to the request functions through the headers, params, and auth keyword arguments.

6. The response object returned by the request functions contains information about the status code, headers, and content of the response. You can access this information using the attributes and methods of the response object, such as response.status_code, response.headers, and response.json().

38. What are the key features of the Matplotlib library for data visualization?

Matplotlib is a widely used Python library for creating static, animated, and interactive data visualizations. Its key features include:

1. Multiple plot types: Matplotlib supports various plot types, such as line plots, scatter plots, bar plots, histograms, 3D plots, and more.

2. Customizability: The library allows extensive customization of plot elements, such as colors, line styles, markers, labels, legends, and axes, to create visually appealing and informative visualizations.

3. Integration with other libraries: Matplotlib integrates seamlessly with other data analysis and visualization libraries like NumPy, Pandas, and Seaborn.

4. Multiple backends: Matplotlib supports different backends for rendering plots, such as inline display in Jupyter notebooks, interactive GUI windows, and saving plots to various file formats (PNG, SVG, PDF, etc.).

5. Object-oriented API: The library provides an object-oriented API for creating and modifying plots, making it easy to build complex visualizations programmatically.

6. Convenience functions: Matplotlib includes various convenience functions and higher-level plotting interfaces, such as the pyplot interface, for creating simple plots with minimal code.

39. How do you perform web scraping using the BeautifulSoup library?

BeautifulSoup is a Python library used for web scraping purposes to extract data from HTML and XML documents. Here's how to use BeautifulSoup for web scraping:

1. First, install the required libraries: pip install beautifulsoup4 requests

2. Import the libraries in your Python script: import requests and from bs4 import BeautifulSoup.

3. Fetch the page with requests.get() and parse the response content using BeautifulSoup, for example soup = BeautifulSoup(response.text, "html.parser").

4. Extract the desired data using BeautifulSoup's methods for navigating and searching the parsed HTML/XML document. For example, to find all the links on the page, you can use the find_all() method: soup.find_all("a").

5. You can also use methods like find(), select(), and select_one() to locate specific elements based on their tags, attributes, or CSS selectors. Additionally, you can extract text, attributes, or modify the parsed document using BeautifulSoup's API.

6. Process the extracted data as needed (e.g., clean, store, or analyze).

While web scraping, always ensure that you comply with the target website's terms of service, robots.txt file, and respect the website's request rate limits.

40. Describe the primary use cases for the asyncio library.

The asyncio library is a core Python library that provides an asynchronous I/O framework for concurrent programming using coroutines. The primary use cases for the asyncio library include:

1. Asynchronous networking: asyncio provides an event loop and async I/O primitives for writing high-performance network servers and clients, such as web servers, proxies, and chat applications.

2. Concurrency without threads: The library allows you to run multiple tasks concurrently on a single thread, without the need for extra threads or processes, improving the performance and responsiveness of I/O-bound applications. It does not by itself provide parallelism for CPU-bound work.

3. Web scraping and crawling: asyncio can be used for efficient web scraping and crawling tasks by concurrently fetching and processing multiple web pages or API endpoints.

4. Integrating with other asynchronous libraries: asyncio can be used in conjunction with other asynchronous Python libraries, such as aiohttp, aiomysql, or aioredis, to build scalable and high-performance applications.

5. Task scheduling and coordination: The library provides tools for scheduling and coordinating tasks, such as timers, queues, and synchronization primitives, enabling complex workflows and concurrency patterns.

6. Implementing custom asynchronous protocols: asyncio's low-level APIs allow you to implement custom asynchronous protocols and transport layers for specialized use cases or to integrate with non-Python systems.
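To make the concurrency use case concrete, here is a minimal sketch that runs two coroutines at once with asyncio.gather(). The fetch coroutine is hypothetical, and asyncio.sleep() stands in for a real network call:

```python
import asyncio


async def fetch(name, delay):
    """Pretend to fetch a resource; the sleep stands in for network I/O."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    # Both coroutines wait at the same time, so the total time is close
    # to the longest delay rather than the sum of the delays.
    return await asyncio.gather(fetch("a", 0.02), fetch("b", 0.01))


results = asyncio.run(main())
print(results)  # ['a done', 'b done']
```

gather() returns results in the order the awaitables were passed, regardless of which one finished first.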

Debugging and testing

41. How do you use the Python debugger (pdb) to debug your code?

To use the Python debugger (pdb) for debugging your code, follow these steps:

1. Import the pdb module by adding the following line to your code: import pdb

2. Set a breakpoint in your code where you want to start debugging by adding the following line: pdb.set_trace(). This will pause the execution of your code at the specified point, and you can then interact with the debugger in the console. Since Python 3.7, the built-in breakpoint() function does the same thing without an explicit import.

3. Run your Python script. When the breakpoint is reached, the debugger will start, and you can enter various commands to inspect variables, step through the code, or continue execution. Some useful pdb commands include:

n (next): Execute the current line and move to the next one.

s (step): Step into a function or method call.

c (continue): Continue execution until the next breakpoint or the end of the script.

q (quit): Exit the debugger and terminate the script.

p (print): Print the value of a variable or expression.

l (list): List the source code around the current line.

42. What is the difference between a unit test and an integration test?

A unit test focuses on a single, isolated piece of code, such as a function or a class method, and tests its behavior under various conditions. It ensures that the individual code components are working correctly. Unit tests are typically fast, easy to write, and have a narrow scope.

An integration test, on the other hand, examines how different components of a system work together, such as interactions between functions, classes, or external services. Integration tests can involve multiple components, layers, or even systems, and they validate the functionality of the entire system or subsystem. Integration tests are generally slower and more complex than unit tests, as they require setting up the environment and dependencies necessary to test the interactions.

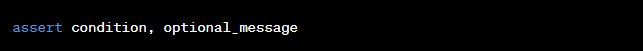

43. Explain the use of the assert statement in Python.

The assert statement in Python is used to verify if a given condition is True. If the condition is True, the program execution continues normally. However, if the condition is False, the program raises an AssertionError exception. This can be helpful in detecting errors or unexpected situations in your code during development or testing. An assert statement has the following syntax:
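As a sketch, the general form is `assert condition, optional_message`. The hypothetical average function below uses it to guard against empty input:

```python
def average(values):
    # General form: assert condition, optional_message
    assert len(values) > 0, "values must not be empty"
    return sum(values) / len(values)


print(average([2, 4]))  # 3.0
# average([]) raises AssertionError: values must not be empty
```

Assertions are skipped entirely when Python runs with the -O flag, so they suit internal sanity checks rather than validation of user input.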

Here, condition is the expression to be evaluated, and optional_message is an optional message to be included with the AssertionError if the condition is False.

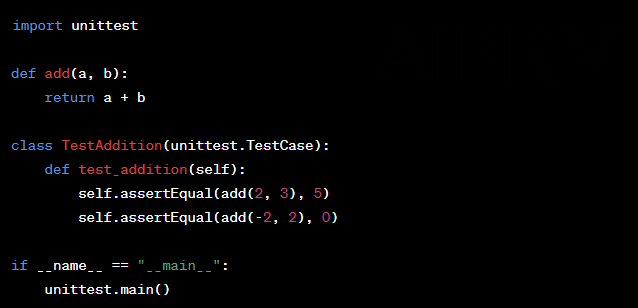

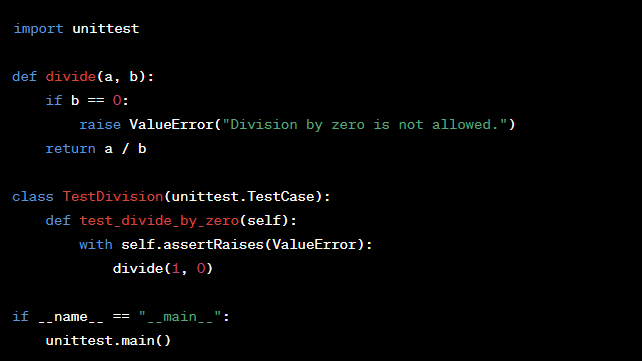

44. How do you write a simple test case using the unittest module in Python?

1. Import the unittest module.

2. Define a test class that inherits from unittest.TestCase.

3. Write test methods within the test class, each starting with the word "test".

4. Use the assert methods provided by the unittest.TestCase class to validate the expected outcomes.

5. Run the test suite by calling unittest.main() in the script or by running the module from the command line with python -m unittest.
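The steps above can be sketched as follows. The add function under test and the test names are hypothetical:

```python
import unittest


def add(a, b):
    """Function under test."""
    return a + b


class TestAdd(unittest.TestCase):
    def test_adds_integers(self):
        self.assertEqual(add(2, 3), 5)

    def test_adds_negative_numbers(self):
        self.assertEqual(add(-1, -1), -2)
```

Saved as a module, this can be run with python -m unittest, or by adding a call to unittest.main() under an `if __name__ == "__main__":` guard.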

45. What are the key features of the pytest testing framework?

The pytest testing framework is a popular alternative to the built-in unittest module in Python. Some of its key features include:

1. Concise test function syntax: Tests can be written as simple functions instead of requiring test classes.

2. Powerful assertions: assert statements can be used directly, with pytest providing detailed error messages when tests fail.

3. Fixtures: A powerful mechanism for setting up and tearing down resources shared across multiple tests.

4. Parametrization: Easy test case parametrization to run the same test with different inputs and expected outputs.

5. Plugins: Extensibility through a wide variety of plugins for various use cases, such as parallel test execution, test coverage, and more.

6. Integration with other tools: Pytest can work seamlessly with other testing tools like mock, coverage.py, and many CI/CD platforms.

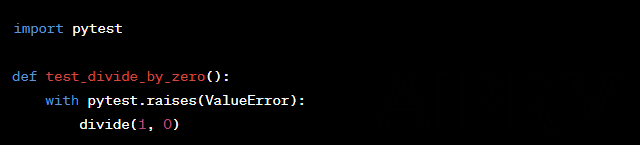

46. How do you test exceptions in Python?

To test exceptions in Python using the unittest module, you can use the assertRaises method or the assertRaises context manager. Here's an example:
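A minimal sketch showing both forms. The parse_age function under test is hypothetical:

```python
import unittest


def parse_age(text):
    """Convert text to a non-negative integer age."""
    age = int(text)
    if age < 0:
        raise ValueError("age cannot be negative")
    return age


class TestParseAge(unittest.TestCase):
    def test_rejects_negative_method_form(self):
        # Method form: pass the callable and its arguments separately.
        self.assertRaises(ValueError, parse_age, "-5")

    def test_rejects_negative_context_manager_form(self):
        # Context-manager form: also gives access to the raised exception.
        with self.assertRaises(ValueError) as ctx:
            parse_age("-5")
        self.assertIn("negative", str(ctx.exception))
```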

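In pytest, you can use the pytest.raises context manager to test exceptions: place the call that should fail inside a `with pytest.raises(ZeroDivisionError):` block (substituting the exception you expect), and the test passes only if that exception is raised.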

47. Explain the concept of test-driven development (TDD) and how it's implemented in Python.

Test-driven development (TDD) is a software development methodology in which you write tests before implementing the code. TDD involves the following steps:

1. Write a failing test for a specific feature or functionality.

2. Implement the code to make the test pass.

3. Refactor the code to improve its quality and maintainability without changing its behavior.

4. Repeat the process for each new feature or functionality.

In Python, you can implement TDD using testing frameworks like unittest or pytest. The key is to start by writing a test that fails, then writing the minimum amount of code to make the test pass, and finally refactoring the code for better quality while ensuring the test still passes.

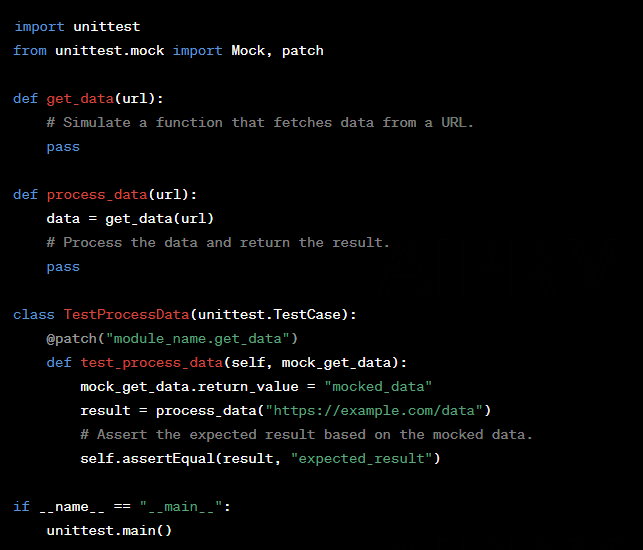

48. How do you mock objects or functions in Python tests?

In Python, you can use the unittest.mock module to create mock objects or functions in your tests. The Mock class allows you to create mock objects with customizable behavior, while the `patch` function can be used as a decorator or context manager to temporarily replace objects or functions with mocks during the test. Here's an example of mocking a function:
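A minimal sketch using patch as a context manager. The roll_die function is a hypothetical example of code that depends on something unpredictable (here, random.randint) which the test replaces with a mock.

```python
import random
from unittest.mock import patch


def roll_die():
    """Hypothetical function that depends on randomness."""
    return random.randint(1, 6)


# Temporarily replace random.randint with a mock that always returns 4.
with patch("random.randint", return_value=4) as mock_randint:
    mocked_result = roll_die()

# The mock records how it was called, so the call can be verified.
mock_randint.assert_called_once_with(1, 6)
print(mocked_result)  # 4
```

Once the with block ends, random.randint is restored to the real function.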

49. Describe the use of code coverage tools, such as coverage.py, in assessing test coverage.

Code coverage tools, like coverage.py, are used to measure the percentage of your code that is executed by your test suite. This helps identify areas of your code that are not being tested, which can be a potential source of bugs. To use coverage.py, follow these steps:

1. Install coverage.py using pip install coverage.

2. Run your tests with coverage.py, e.g., coverage run -m unittest discover. This will execute your tests and collect coverage data.

3. Generate a coverage report using coverage report or coverage html. This will show the coverage percentage for each module and the overall project.

By analyzing the coverage report, you can identify areas of your code that need more tests, ensuring that your test suite is more comprehensive and reliable.

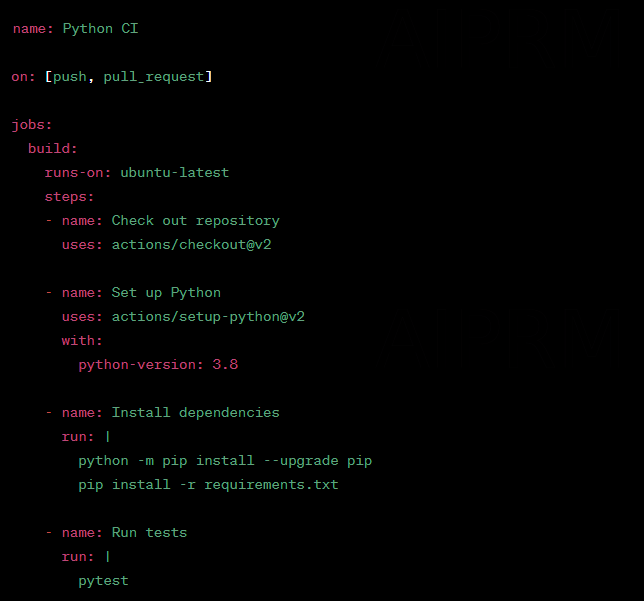

50. How do you set up and run tests in a continuous integration (CI) environment, such as Jenkins or GitHub Actions?

Setting up and running tests in a CI environment involves the following steps:

1. Configure your CI platform (e.g., Jenkins or GitHub Actions) to build and test your Python project upon changes to the source code.

2. Install the required dependencies, such as Python and any necessary packages, in the CI environment.

3. Execute your test suite using your preferred test runner (e.g., unittest or pytest; the older nose runner is no longer maintained).

4. Generate a test report and coverage report, if desired.

5. Configure the CI platform to notify developers of build and test failures or to block merging of code changes if tests fail.

Here's an example of a GitHub Actions workflow for a Python project using pytest:

Performance optimization

51. Explain the differences between a list comprehension and a generator expression in Python, and discuss their impact on performance.

List comprehensions and generator expressions both build a series of values from an iterable in a concise and readable way, but they have different performance characteristics. A list comprehension creates the whole list in memory, while a generator expression creates a generator object that yields values on the fly as they are requested.

List comprehensions are often slightly faster when every element is needed, but they consume more memory because the entire list is built at once. Generator expressions are more memory-efficient, as they yield one value at a time and don't store the entire sequence in memory. The trade-off is that a generator can be iterated only once and does not support indexing or len(). When working with large datasets or memory constraints, generator expressions are usually the better choice.
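The memory difference can be observed with sys.getsizeof, as in this minimal sketch (the variable names are chosen for illustration, and exact sizes vary by Python version and platform):

```python
import sys

squares_list = [n * n for n in range(100_000)]  # builds every element now
squares_gen = (n * n for n in range(100_000))   # builds elements lazily

# The list object grows with the number of elements; the generator object
# stays small regardless of how many values it will eventually produce.
print(sys.getsizeof(squares_list))
print(sys.getsizeof(squares_gen))

# Both can feed an aggregate such as sum(); the generator never holds
# all 100,000 values in memory at once.
total_from_list = sum(squares_list)
total_from_gen = sum(squares_gen)
print(total_from_list == total_from_gen)  # True
```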

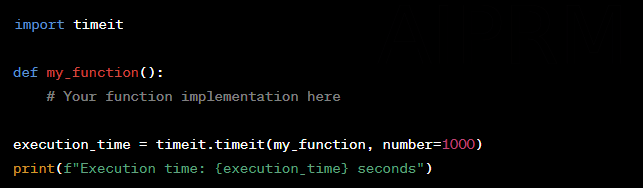

52. How do you use the timeit module to measure the performance of a Python function?

The timeit module provides a simple way to measure the execution time of small bits of Python code. It can be used as follows:
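A minimal sketch, assuming a hypothetical build_squares function as the code to be timed:

```python
import timeit


def build_squares():
    """Hypothetical function to be timed."""
    return [n * n for n in range(1_000)]


# Time 1,000 calls; timeit accepts either a callable or a string of code.
elapsed = timeit.timeit(build_squares, number=1_000)
print(f"1,000 calls took {elapsed:.4f} seconds")
```

Passing a smaller number than the default keeps the measurement short for functions that do a noticeable amount of work per call.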

The timeit.timeit() function accepts, among other parameters, the statement or callable to be measured (stmt) and the number of times it should be executed (number, default 1,000,000). It returns the total execution time for all runs, in seconds, as a float.

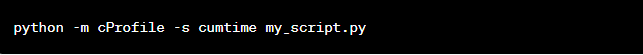

53. Describe the process of profiling Python code to identify performance bottlenecks.

Profiling is the process of measuring the performance of a program and identifying bottlenecks or areas that can be optimized. The built-in cProfile module can be used to profile Python code. It provides a detailed report of how long each function takes to execute and how many times it's called.

To profile your code from the command line, run cProfile as a module, for example: `python -m cProfile -s cumulative my_script.py`

This will execute my_script.py and generate a report sorted by the cumulative time spent in each function. Analyzing this report can help you identify performance bottlenecks and optimize your code accordingly.
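cProfile can also be used from within a program. The sketch below is one way to do that under stated assumptions: slow_sum is a hypothetical workload, and the report is limited to the ten entries with the highest cumulative time.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Hypothetical workload to be profiled."""
    return sum(i * i for i in range(n))


profiler = cProfile.Profile()
profiler.enable()
slow_sum(200_000)
profiler.disable()

# Collect the report in a string, sorted by cumulative time.
stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream).sort_stats("cumulative")
stats.print_stats(10)
report = stream.getvalue()
print(report)
```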

54. What are some common techniques to optimize the performance of Python code?

1. Use built-in functions and libraries, as they are usually optimized for performance.

2. Choose the right data structures for your problem (e.g., sets for membership tests, deque for queue operations).

3. Use list comprehensions or generator expressions instead of explicit for loops when building collections.

4. Minimize the use of global variables and avoid unnecessary nested loops.

5. Utilize caching with functools.lru_cache for memoization of expensive function calls.

6. Optimize memory usage with __slots__ in classes and by using appropriate data structures.

7. Use efficient algorithms and optimize your code logic.

8. Profile your code to identify bottlenecks and optimize them.

9. Consider using parallelism, concurrency, or an alternative implementation with a JIT compiler, such as PyPy, to boost performance.

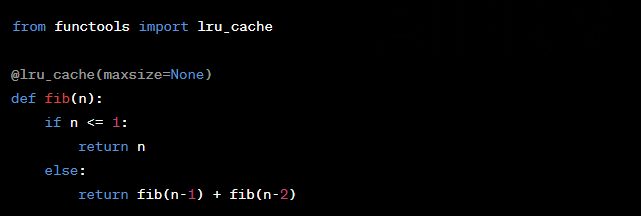

55. How do you use the functools.lru_cache decorator for caching in Python?

The functools.lru_cache decorator is a simple way to add caching (memoization) to a function. It caches the results of function calls so that subsequent calls with the same arguments return the cached result instead of recomputing the value. This can significantly improve the performance of functions with expensive computations, especially for recursive functions.

The maxsize argument determines the maximum number of cached results (the default is 128). Setting it to None removes the limit, so the cache can grow without bound.
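A minimal sketch of the decorator applied to a recursive function. The fibonacci function is an illustrative example, not taken from the original article.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fibonacci(n):
    """Return the n-th Fibonacci number (fibonacci(0) == 0)."""
    if n < 2:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)


print(fibonacci(80))           # 23416728348467685
print(fibonacci.cache_info())  # hit/miss statistics for the cache
```

Without the cache, the same call would recompute the smaller Fibonacci numbers an exponential number of times; with it, each value is computed once.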

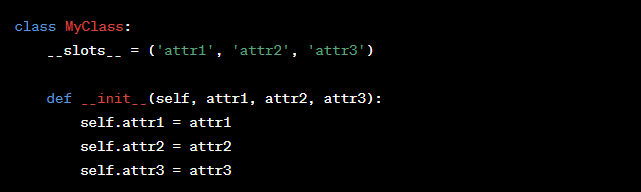

56. Explain the role of the __slots__ attribute in a Python class and its impact on memory usage.

In Python, each instance of a regular class has a built-in dictionary, __dict__, that stores its attributes. This dictionary can consume a significant amount of memory, especially when there are many instances. The __slots__ attribute allows you to explicitly declare the instance attributes of a class, which can lead to memory savings.

By defining __slots__ in your class, you tell Python not to use a __dict__ and instead use a more memory-efficient data structure to store instance attributes. This can reduce memory overhead, particularly for classes with a large number of instances.

Here's an example:
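A minimal sketch comparing a regular class with a slotted one. The class names PointWithDict and PointWithSlots are chosen for this illustration.

```python
class PointWithDict:
    def __init__(self, x, y):
        self.x = x
        self.y = y


class PointWithSlots:
    __slots__ = ("x", "y")  # only these instance attributes are allowed

    def __init__(self, x, y):
        self.x = x
        self.y = y


regular = PointWithDict(1, 2)
slotted = PointWithSlots(1, 2)

print(hasattr(regular, "__dict__"))  # True
print(hasattr(slotted, "__dict__"))  # False

try:
    slotted.z = 3  # not declared in __slots__
except AttributeError as exc:
    print(f"AttributeError: {exc}")
```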

Keep in mind that using __slots__ can have some limitations, such as not being able to add new attributes at runtime or not supporting multiple inheritance well.

57. How do you handle large datasets efficiently using Python's built-in data structures or libraries like NumPy and Pandas?

When working with large datasets, it's crucial to use the right data structures and libraries to optimize memory usage and processing speed.

1. Use built-in data structures like lists, tuples, sets, and dictionaries as appropriate for your use case.

2. For numerical operations on large arrays, use NumPy, which provides efficient array operations and broadcasting.

3. For data manipulation and analysis, use Pandas, which offers powerful DataFrame and Series objects for handling large datasets with complex structures.

4. Use generators or iterator-based approaches for processing large datasets to avoid loading the entire dataset into memory.

5. Optimize memory usage with the appropriate data types (e.g., using int8 instead of int64 when the range of values allows it).

6. Consider using memory-mapped files or databases for handling very large datasets that don't fit into memory.

7. Use parallel processing or multiprocessing to speed up data processing tasks.
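The generator-based approach from the list above can be sketched with the standard library alone. In this illustration, read_in_chunks is a hypothetical helper, and io.StringIO stands in for a large file that would normally be opened with open().

```python
import io


def read_in_chunks(file_obj, chunk_size=1024):
    """Yield successive chunks from a file-like object."""
    while True:
        chunk = file_obj.read(chunk_size)
        if not chunk:
            break
        yield chunk


# io.StringIO stands in for a large file on disk.
fake_file = io.StringIO("x" * 10_000)

# Only one chunk is held in memory at a time while counting characters.
total_chars = sum(len(chunk) for chunk in read_in_chunks(fake_file, chunk_size=4096))
print(total_chars)  # 10000
```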

58. What are some techniques for optimizing the performance of a Python web application?

1. Use a production-ready WSGI server (e.g., Gunicorn or uWSGI) to serve your application.

2. Implement caching at various levels (e.g., HTTP caching, server-side caching, database caching) to reduce the load on your application and improve response times.

3. Optimize database queries and use an Object-Relational Mapper (ORM) like SQLAlchemy or Django ORM to manage database connections efficiently.

4. Use a Content Delivery Network (CDN) to serve static assets, reducing the load on your server and decreasing latency for users.

5. Minimize the use of blocking or CPU-bound operations in your application, and use asynchronous programming with libraries like asyncio or frameworks like FastAPI.

6. Profile your application to identify performance bottlenecks and optimize them.

7. Scale your application horizontally by adding more servers or using load balancing.

8. Implement rate limiting and connection pooling to manage resource usage.

9. Optimize frontend performance by minifying CSS/JS files, using image compression, and employing lazy loading techniques.

59. How does the use of a Just-In-Time (JIT) compiler, such as PyPy, affect the performance of Python code?

A JIT compiler translates the Python code into machine code at runtime, which can lead to significant performance improvements compared to the standard CPython interpreter. PyPy is an alternative Python implementation that uses a JIT compiler to optimize the execution of Python code.

PyPy can provide substantial speed improvements for many workloads, particularly long-running, CPU-bound code written in pure Python. However, it may not always be faster than CPython, especially for I/O-bound tasks, short-lived scripts, or code that relies heavily on C extension modules.

To use PyPy, simply install it and run your Python script with the pypy command instead of python. It's important to note that while PyPy is mostly compatible with CPython, there may be some differences or incompatibilities with certain libraries or features.

60. Explain the concept of parallelism and concurrency in Python and how they can be used to improve performance.

Parallelism and concurrency are techniques that allow a program to make progress on multiple tasks at once, potentially improving the performance of your Python code.

Parallelism

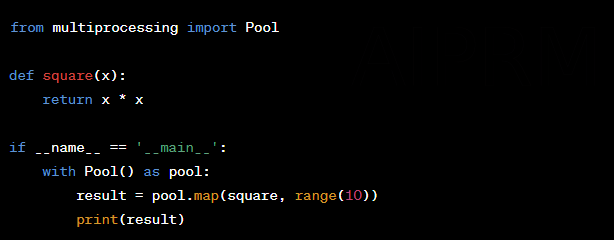

Parallelism involves running multiple tasks at the same time by utilizing multiple CPU cores or processors. In Python, the multiprocessing module provides a way to achieve parallelism by creating separate processes for different tasks. This can be particularly useful for CPU-bound tasks, where multiple cores can be leveraged to execute tasks faster.

Example:

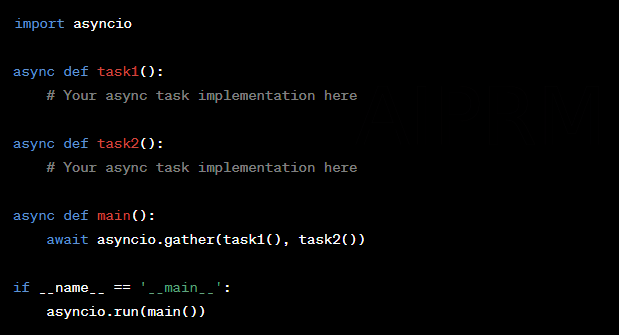

Concurrency

Concurrency is about managing multiple tasks simultaneously, but not necessarily executing them in parallel. In Python, the threading module allows you to create multiple threads to handle different tasks concurrently. However, due to the Global Interpreter Lock (GIL) in CPython, threads may not execute in true parallel. For I/O-bound tasks, using the asyncio module and asynchronous programming can lead to better performance by allowing your program to handle multiple tasks concurrently without blocking.

Example:
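A minimal asyncio sketch of concurrency for I/O-bound work. The fetch coroutine is hypothetical, and asyncio.sleep stands in for a network call.

```python
import asyncio
import time


async def fetch(name, delay):
    """Hypothetical I/O-bound task; asyncio.sleep stands in for a network call."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    # Run three tasks concurrently on a single thread.
    return await asyncio.gather(
        fetch("a", 0.2), fetch("b", 0.2), fetch("c", 0.2)
    )


start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start

print(results)            # ['a done', 'b done', 'c done']
print(f"{elapsed:.2f}s")  # roughly 0.2s rather than 0.6s
```

Because the three waits overlap, the total time is close to the longest single delay instead of the sum of all delays.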

Design patterns

61. What is a design pattern, and why are they important in software development?

A design pattern is a reusable and proven solution to a common problem in software design.

Design patterns provide a template for solving similar issues, enabling developers to write more efficient, maintainable, and scalable code.

They are important in software development because they encapsulate best practices, promote code reusability, and facilitate communication among developers by providing a shared vocabulary.

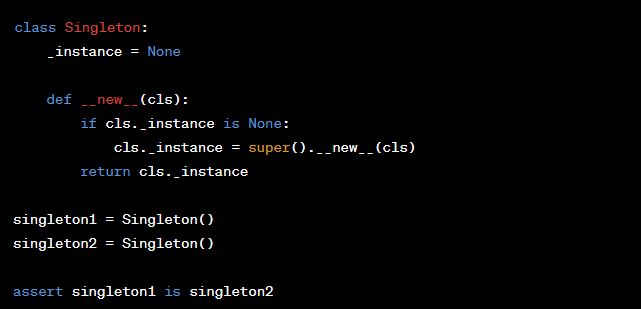

62. Explain the Singleton design pattern and provide a Python implementation.

The Singleton design pattern ensures that a class has only one instance and provides a global point of access to that instance. It is useful when you need to control access to shared resources or when you want to centralize state management.
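One common way to sketch this in Python is to override __new__ so that the class hands back the same instance every time. This is a minimal, non-thread-safe illustration; in many Python programs a module-level object serves the same purpose more simply.

```python
class Singleton:
    _instance = None

    def __new__(cls):
        # Create the instance on first use and reuse it afterwards.
        if cls._instance is None:
            cls._instance = super().__new__(cls)
        return cls._instance


first = Singleton()
second = Singleton()
print(first is second)  # True
```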

63. Describe the Factory Method pattern and its use cases in Python.

The Factory Method pattern is a creational design pattern that provides an interface for creating objects in a superclass but allows subclasses to alter the type of objects that will be created. It is useful when you have a complex object creation process or when you want to decouple the object creation from the business logic that uses the objects.

Use cases in Python include creating objects that share a common interface, such as different types of database connectors, file parsers, or API clients.
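A minimal sketch of the pattern using the file-parser use case. All class names here (Parser, Importer, and their subclasses) are hypothetical and chosen only for illustration.

```python
import json
from abc import ABC, abstractmethod


class Parser(ABC):
    @abstractmethod
    def parse(self, text):
        """Turn raw text into Python data."""


class JsonParser(Parser):
    def parse(self, text):
        return json.loads(text)


class CsvLineParser(Parser):
    def parse(self, text):
        return text.split(",")


class Importer(ABC):
    """Creator: subclasses decide which Parser to instantiate."""

    @abstractmethod
    def create_parser(self):
        """The factory method."""

    def load(self, text):
        # Business logic depends only on the Parser interface.
        return self.create_parser().parse(text)


class JsonImporter(Importer):
    def create_parser(self):
        return JsonParser()


class CsvImporter(Importer):
    def create_parser(self):
        return CsvLineParser()


print(JsonImporter().load('{"a": 1}'))  # {'a': 1}
print(CsvImporter().load("a,b,c"))      # ['a', 'b', 'c']
```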

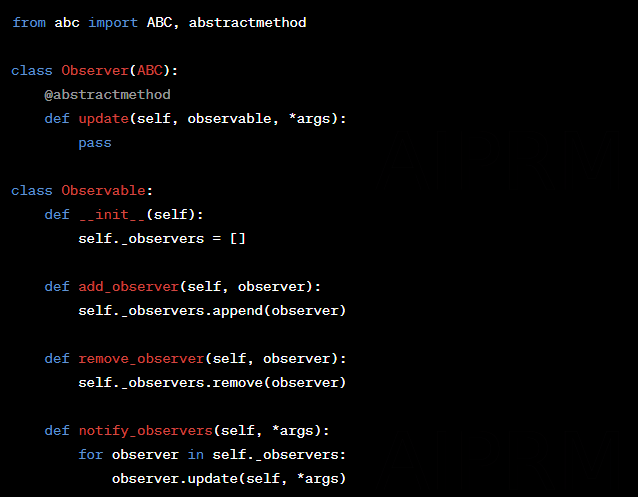

64. How do you implement the Observer pattern in Python?

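The Observer pattern is a behavioral design pattern that defines a one-to-many dependency between objects, allowing multiple observers to be notified when the state of a subject changes. Python has no built-in Observer or Observable classes, so the pattern is usually implemented by hand: a subject keeps a list of observers and calls a notification method on each of them when its state changes. The abc module can optionally be used to define an abstract observer interface.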
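A minimal hand-written sketch of the pattern. The class names Subject and LoggingObserver are chosen for this example and are not part of any library.

```python
class Subject:
    def __init__(self):
        self._observers = []

    def attach(self, observer):
        self._observers.append(observer)

    def detach(self, observer):
        self._observers.remove(observer)

    def notify(self, event):
        # Push the event to every registered observer.
        for observer in self._observers:
            observer.update(event)


class LoggingObserver:
    def __init__(self):
        self.received = []

    def update(self, event):
        self.received.append(event)


subject = Subject()
first, second = LoggingObserver(), LoggingObserver()
subject.attach(first)
subject.attach(second)
subject.notify("price changed")
subject.detach(second)
subject.notify("stock changed")

print(first.received)   # ['price changed', 'stock changed']
print(second.received)  # ['price changed']
```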

65. Explain the Decorator pattern and how it's used in Python.

The Decorator pattern is a structural design pattern that allows adding new functionality to an object without altering its structure. In Python, decorators are often implemented using functions or classes that take a function or a class as an argument and return a modified version of the original object.

A common use case in Python is to add functionality to functions or methods, such as logging, memoization, or access control, without modifying their code.
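A minimal sketch of a function decorator that records calls. The names log_calls and multiply are hypothetical. functools.wraps is used so the wrapped function keeps its original name and docstring.

```python
import functools


def log_calls(func):
    """Decorator that records each call before delegating to func."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls.append((args, kwargs))
        return func(*args, **kwargs)

    wrapper.calls = []
    return wrapper


@log_calls
def multiply(a, b):
    return a * b


print(multiply(3, 4))     # 12
print(multiply.calls)     # [((3, 4), {})]
print(multiply.__name__)  # multiply
```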

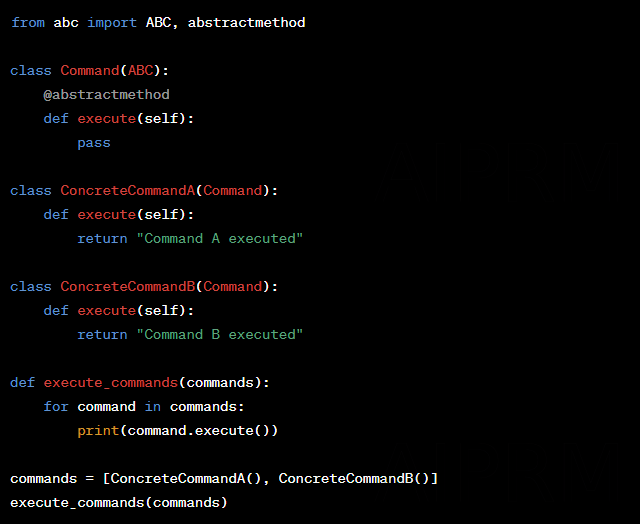

66. What is the Command pattern, and how can it be implemented in Python?

The Command pattern is a behavioral design pattern that turns a request into a stand-alone object, allowing you to parameterize methods with different requests, delay or queue a request’s execution, or support undoable operations. In Python, the Command pattern can be implemented using classes that encapsulate the request and provide a common interface with an execute() method.
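A minimal sketch with a single command class that supports undo. AppendCommand is a hypothetical name, and a plain list stands in for the document being edited.

```python
class AppendCommand:
    """Encapsulates 'append text to a document' as an object."""

    def __init__(self, document, text):
        self.document = document
        self.text = text

    def execute(self):
        self.document.append(self.text)

    def undo(self):
        self.document.pop()


document = []  # receiver: a plain list standing in for a document
history = []   # executed commands, kept so they can be undone

for text in ("Hello", "World"):
    command = AppendCommand(document, text)
    command.execute()
    history.append(command)

print(document)       # ['Hello', 'World']
history.pop().undo()  # undo the most recent command
print(document)       # ['Hello']
```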

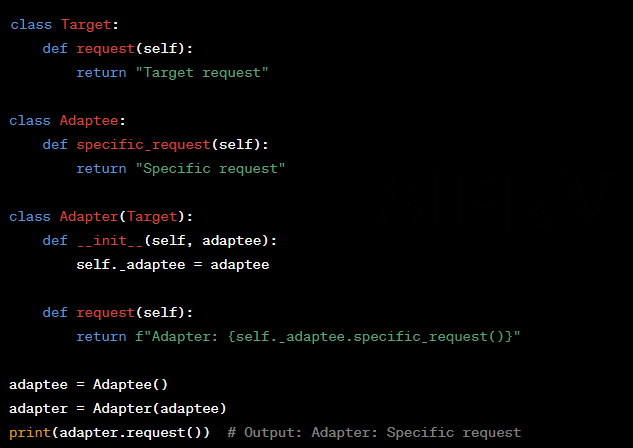

67. Describe the Adapter pattern and provide a Python example.

The Adapter pattern is a structural design pattern that allows incompatible interfaces to work together by converting one interface into another. It is useful when you want to integrate existing components or libraries that have different interfaces.

Here is a Python example of the Adapter pattern:
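A minimal sketch, assuming a hypothetical FahrenheitSensor class as the existing component and client code that expects a read_celsius() method:

```python
class FahrenheitSensor:
    """Existing component with an incompatible interface (hypothetical)."""

    def read_fahrenheit(self):
        return 212.0


class CelsiusSensorAdapter:
    """Exposes the read_celsius() interface the client expects."""

    def __init__(self, sensor):
        self._sensor = sensor

    def read_celsius(self):
        return (self._sensor.read_fahrenheit() - 32) * 5 / 9


def report(sensor):
    # Client code only knows about read_celsius().
    return f"{sensor.read_celsius():.1f} C"


print(report(CelsiusSensorAdapter(FahrenheitSensor())))  # 100.0 C
```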

68. Explain the concept of the Model-View-Controller (MVC) pattern and its application in Python web development.

The Model-View-Controller (MVC) pattern is an architectural design pattern that separates the application logic into three interconnected components:

Model: Represents the data and business logic of the application.

View: Represents the user interface and presentation of the data.

Controller: Handles user input and manages interactions between the Model and the View.

In Python web development, the MVC pattern is used to build scalable and maintainable applications. Django implements a variation known as Model-View-Template (MVT), in which the framework itself takes on much of the controller role. Flask, as a microframework, does not enforce a particular architecture, but Flask applications are often organized along MVC lines.

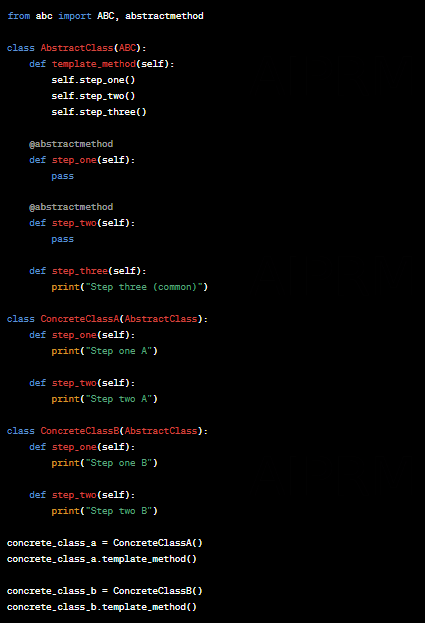

69. What is the Template Method pattern, and how can it be used in Python?

The Template Method pattern is a behavioral design pattern that defines the skeleton of an algorithm in a base class but lets subclasses override specific steps without changing the algorithm's structure. It promotes code reusability and reduces code duplication.

Here is a Python example of the Template Method pattern:
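A minimal sketch in which the base class fixes the order of the steps and a subclass supplies one of them. ReportGenerator and CsvReport are hypothetical names used for illustration.

```python
from abc import ABC, abstractmethod


class ReportGenerator(ABC):
    def generate(self, rows):
        """Template method: the fixed sequence of steps."""
        lines = [self.header()]
        lines.extend(self.format_row(row) for row in rows)
        lines.append(self.footer())
        return "\n".join(lines)

    def header(self):
        return "REPORT"

    def footer(self):
        return "END"

    @abstractmethod
    def format_row(self, row):
        """Step that every subclass must supply."""


class CsvReport(ReportGenerator):
    def format_row(self, row):
        return ",".join(str(value) for value in row)


print(CsvReport().generate([(1, "a"), (2, "b")]))
# REPORT
# 1,a
# 2,b
# END
```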

70. Describe the Strategy pattern and its implementation in Python.

The Strategy pattern is a behavioral design pattern that defines a family of algorithms, encapsulates each one, and makes them interchangeable. The pattern allows selecting an algorithm at runtime and promotes loose coupling between the context and the algorithms.

Here is a Python example of the Strategy pattern:
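A minimal sketch that uses plain functions as interchangeable strategies, which is a common lightweight form of the pattern in Python. The names Checkout, no_discount, and ten_percent_off are hypothetical.

```python
def no_discount(total):
    return total


def ten_percent_off(total):
    return total * 0.9


class Checkout:
    """Context: delegates pricing to whichever strategy it holds."""

    def __init__(self, pricing_strategy=no_discount):
        self.pricing_strategy = pricing_strategy

    def total(self, prices):
        return self.pricing_strategy(sum(prices))


checkout = Checkout()
print(checkout.total([40, 60]))              # 100
checkout.pricing_strategy = ten_percent_off  # swap the algorithm at runtime
print(checkout.total([40, 60]))              # 90.0
```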

Version control

71. What is the purpose of version control systems in software development?

Version control systems (VCS) help manage changes to source code and other files in a software development project.

They allow developers to track and compare different versions of files, collaborate with other team members, and easily revert to a previous version if needed.

Version control systems provide a history of changes, facilitating better understanding, debugging, and accountability.

They also help prevent conflicts when multiple developers work on the same codebase.

72. Explain the basic Git workflow and its main commands (e.g., init, add, commit, push, pull, branch, merge).

The basic Git workflow consists of the following steps:

1. git init: Initializes a new Git repository.

2. git add <file>: Adds specific files to the staging area; git add . stages all changes in the current directory.

3. git commit -m "Message": Commits the changes in the staging area with a descriptive message.

4. git push: Pushes the committed changes to the remote repository.

5. git pull: Fetches changes from the remote repository and merges them into the current branch.

6. git branch <branch_name>: Creates a new branch with the given name.

7. git checkout <branch_name>: Switches to the specified branch.

8. git merge <branch_name>: Merges the changes from the specified branch into the current branch.

73. How do you resolve merge conflicts in Git?

Merge conflicts occur when two branches have conflicting changes in the same file. To resolve merge conflicts in Git, follow these steps:

1. Identify the conflicting files by checking the output of the git merge command or using git status.

2. Open the conflicting files in a text editor and look for the conflict markers (<<<<<<<, =======, and >>>>>>>). These markers indicate the conflicting changes from both branches.

3. Review the changes and manually resolve the conflicts by deciding which version to keep or by merging the changes as appropriate.

4. After resolving the conflicts, save the files, and stage them using git add <file>.

5. Commit the resolved changes with git commit -m "Message" and continue with the Git workflow.

74. Describe the concept of branching and merging in Git and provide a typical use case.

Branching in Git allows developers to create independent lines of development, isolating changes made for a particular feature, bugfix, or experimentation from the main branch (usually master or main).

Merging is the process of integrating changes from one branch into another, combining the work of multiple developers or features.

A typical use case for branching and merging is when a developer creates a new branch for implementing a feature. Once the feature is complete and tested, the developer merges the feature branch back into the main branch.

75. What is a pull request, and how is it used in a collaborative development process?

A pull request is a mechanism used in collaborative development, typically on platforms like GitHub or GitLab, to propose changes from a branch to be merged into another branch (usually the main branch).

It allows developers to review and discuss the proposed changes, request modifications, or approve the changes before merging.

Pull requests help ensure code quality and maintainability by involving the team in the review process, providing a platform for feedback and knowledge sharing.

76. Explain the differences between a centralized version control system (like SVN) and a distributed version control system (like Git).

A centralized version control system (CVCS) relies on a single, central repository where all changes are tracked and managed. Every developer works on a working copy checked out from that repository, and changes are committed to and updated from it directly.

In contrast, a distributed version control system (DVCS) like Git allows every developer to have a complete copy of the repository with the full history of changes. This enables developers to work independently and perform most operations offline.

Key differences between CVCS and DVCS include:

1. Network dependency: CVCS requires a constant connection to the central repository for most operations, while DVCS allows developers to work offline and synchronize changes later.

2. Performance: DVCS is generally faster for most operations since changes are made locally before being pushed to the remote repository.

3. Collaboration: DVCS makes collaboration easier, as each developer works on their own copy, reducing the risk of conflicts and facilitating branching and merging.

4. Redundancy and backup: In DVCS, every developer's copy acts as a backup of the entire repository, providing better fault tolerance in case of data loss.

77. How do you use Git to work with remote repositories, such as GitHub or GitLab?

To work with remote repositories in Git, follow these steps:

1. Clone the remote repository using git clone <repository_url>. This creates a local copy of the repository with the full history of changes.

2. Make changes to the local repository, using the standard Git workflow (add, commit, etc.).

3. To sync your local changes with the remote repository, use git push to push your committed changes.

4. To fetch updates from the remote repository, use git pull or git fetch followed by git merge.

For collaboration, you can create branches, push them to the remote repository, and create pull requests for review and merging.

78. Describe the concept of Git tags and their usage.

Git tags are references to specific points in a repository's history, usually used to mark significant events, such as releases or milestones.

Tags are human-readable and don't change once created, making them ideal for marking stable versions of the codebase.

To create a tag, use git tag <tag_name> <commit_hash> or git tag -a <tag_name> -m "Message" for an annotated tag with a message.

To push tags to a remote repository, use git push --tags. To list all tags, use git tag, and to check out the code at a specific tag, use git checkout <tag_name> (this leaves the repository in a detached HEAD state).

79. What are the best practices for writing Git commit messages?

Best practices for writing Git commit messages include:

1. Writing a short, descriptive summary (50 characters or less) in the first line, followed by a blank line.

2. Providing a more detailed explanation (if necessary) in subsequent lines, wrapped at around 72 characters.

3. Using the imperative mood ("Add feature" rather than "Added feature" or "Adds feature").

4. Explaining what the commit does and why, rather than how.

5. Including references to related issues or tickets, if applicable.

6. Keeping the commit messages consistent in style and format throughout the project.

80. How do you use Git to revert changes or go back to a previous commit?

To revert changes or go back to a previous commit in Git, you can use the following methods:

1. git revert <commit_hash>: Creates a new commit that undoes the changes made in the specified commit. This is useful for maintaining the history and is the preferred method for public repositories.

2. git reset <commit_hash>: Moves the branch pointer to the specified commit, discarding any subsequent commits. Use --soft to keep the changes in the staging area, --mixed (default) to move changes to the working directory, and --hard to discard changes completely. This method rewrites the history and should be used with caution, especially in shared repositories.

3. git reflog and git checkout <commit_hash>: git reflog lists the commits that HEAD has recently pointed to, including commits that are no longer reachable from any branch, and git checkout <commit_hash> then checks out one of them (in a detached HEAD state) so that it can be inspected or recovered.

Virtual environments and dependency management

81. What is the purpose of virtual environments in Python development?

Virtual environments serve to isolate the dependencies and Python interpreter for a specific project.

This helps prevent conflicts between package versions, allows for easy replication of the project environment, and simplifies dependency management.

Using virtual environments ensures that each project can run with its required dependencies without interfering with other projects or the system-wide Python installation.

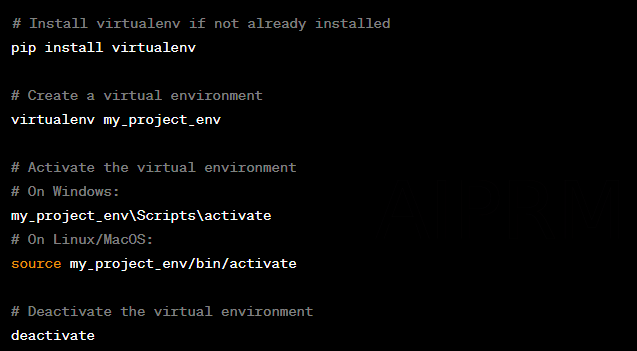

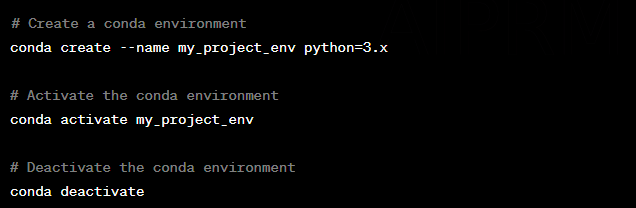

82. How do you create and manage a virtual environment using virtualenv or conda?

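With virtualenv: install the tool with `pip install virtualenv`, create an environment with `virtualenv venv`, and activate it with `source venv/bin/activate` on Linux or macOS (`venv\Scripts\activate` on Windows). Run `deactivate` to leave the environment. The directory name venv is only a common convention.

With conda: create an environment with `conda create --name myenv python=3.11`, activate it with `conda activate myenv`, and leave it with `conda deactivate`. The environment name myenv and the Python version are placeholders.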

83. Explain the role of the requirements.txt file in a Python project.

The requirements.txt file lists the dependencies needed for a Python project, including their specific versions.

This allows for easy replication of the project environment, ensuring consistent behavior across different systems.

To create a requirements.txt file, run pip freeze > requirements.txt. To install the listed dependencies, use pip install -r requirements.txt.

84. Describe how pip is used for package management in Python.

pip is the standard package installer for Python.

It allows developers to install, uninstall, and manage Python packages from the Python Package Index (PyPI) and other sources.

Common pip commands include pip install, pip uninstall, pip list, pip show, and pip freeze. The pip search command no longer works against PyPI, so packages are usually looked up on the PyPI website instead.

85. What is the difference between pip and conda in terms of package management?

pip is the default package manager for Python, focused on installing packages from PyPI.

conda, on the other hand, is a cross-platform package manager primarily used with the Anaconda distribution. It can handle packages for multiple programming languages and manage environments.

While pip installs packages from PyPI, conda installs packages from conda channels, such as Anaconda's default channel and the community-maintained conda-forge.

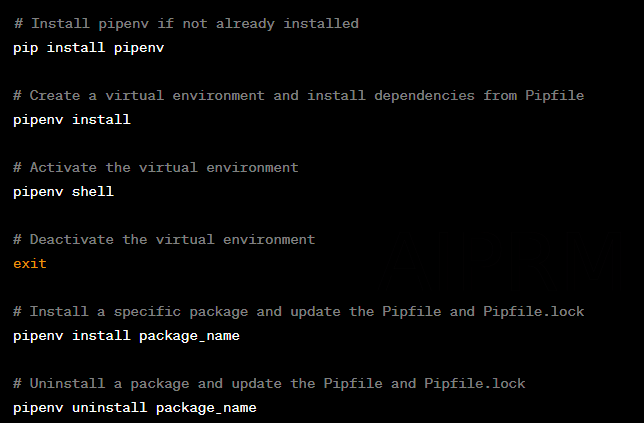

86. How do you use the pipenv tool for managing virtual environments and dependencies in a Python project?

87. Explain the concept of semantic versioning and its importance in managing dependencies.

Semantic versioning is a versioning scheme that uses three numbers (MAJOR.MINOR.PATCH) to indicate the level of changes in a software release.

A change in the major version indicates breaking changes, a change in the minor version indicates backward-compatible new features and a change in the patch version indicates backward-compatible bug fixes.

This system helps developers understand the impact of upgrading dependencies, ensuring that updates do not introduce unexpected issues.
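As a small illustration of how the three parts are read, the sketch below classifies the difference between two version strings. The helper names parse_version and change_level are hypothetical, and the code assumes plain MAJOR.MINOR.PATCH strings without pre-release or build suffixes.

```python
def parse_version(version):
    """Split 'MAJOR.MINOR.PATCH' into a tuple of integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def change_level(old, new):
    """Report the most significant part that differs between two versions."""
    old_parts, new_parts = parse_version(old), parse_version(new)
    for name, before, after in zip(("major", "minor", "patch"), old_parts, new_parts):
        if before != after:
            return name
    return "none"


print(change_level("1.4.2", "2.0.0"))  # major
print(change_level("1.4.2", "1.5.0"))  # minor
print(change_level("1.4.2", "1.4.3"))  # patch
```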

88. What are the benefits of using a tool like Poetry for dependency management in Python projects?

Poetry simplifies dependency management by handling virtual environments, dependency resolution, and package building.

It uses the pyproject.toml file to manage dependencies, allowing for easy replication of project environments. Poetry also provides a clear way to manage development and production dependencies, reducing the risk of deploying unnecessary packages.

Moreover, Poetry's lock file (poetry.lock) ensures that the exact package versions are used across different systems, promoting consistency and reproducibility.

89. How do you handle conflicting dependencies in a Python project?

To handle conflicting dependencies, follow these steps:

1. Identify the conflicting packages and their required versions.

2. Check if the dependent packages can be safely upgraded or downgraded to compatible versions without breaking the project.

3. If a direct resolution is not possible, consider using alternative packages that provide similar functionality without causing conflicts.

4. If the conflict arises from a subdependency, check if it is possible to override the subdependency version in your project's dependency management configuration.

5. In some cases, it might be necessary to isolate conflicting dependencies in separate environments or create custom solutions to address the conflicts.

90. Describe the process of deploying a Python application with its dependencies.

To deploy a Python application with its dependencies, follow these steps:

1. Ensure that your project has a requirements.txt file (or equivalent, e.g., Pipfile or pyproject.toml) listing all necessary dependencies and their versions.

2. Set up a virtual environment on the target system (e.g., using virtualenv, conda, pipenv, or poetry).

3. Activate the virtual environment and install the dependencies from the requirements.txt file (or equivalent) using the appropriate package manager (e.g., pip, conda, pipenv, or poetry).

4. Package your Python application, if needed, using a tool like setuptools, wheel, or flit.

5. Copy your Python application and any required configuration files to the target system.

6. Ensure that the target system has the necessary services and configurations (e.g., a web server, a database, or environment variables) to run your application.

7. Start your Python application within the virtual environment, ensuring that it uses the correct interpreter and dependencies. Depending on the deployment method, this might involve using a process manager like systemd, supervisord, or a containerization platform like Docker.

Code readability and documentation

91. What are the main guidelines in PEP 8, the Python style guide?

1. Indentation: Use 4 spaces per indentation level.

2. Line length: Limit lines to a maximum of 79 characters.

3. Blank lines: Separate top-level functions and class definitions with two blank lines, and method definitions within a class with one blank line.

4. Imports: Place imports on separate lines and at the top of the file, grouped in the following order: standard library imports, related third-party imports, and local application/library-specific imports.

5. Whitespace: Avoid extraneous whitespace. Use a single space around binary operators and after commas, but no space immediately inside parentheses, brackets, or braces.

6. Naming conventions: Use lowercase names with underscores for variables and functions, CapWords (CamelCase) for classes, and uppercase with underscores for constants.

7. Comments: Write clear and concise comments that explain the code's purpose and any non-obvious decisions.

8. Docstrings: Write docstrings for all public modules, functions, classes, and methods.

9. Keep code simple and avoid overusing complex language features.

92. Explain the importance of code readability in Python development.

Code readability is of paramount importance in Python development, as it directly impacts the maintainability, collaboration, and overall quality of the code. The Python language itself (and Python frameworks) was designed with readability in mind, emphasizing simplicity and clarity. Here are several reasons why code readability is crucial in Python development:

Maintainability: Readable code is easier to understand and maintain. When code is clear and well-structured, developers can quickly comprehend its logic, making it easier to modify, fix bugs, or add new features. This reduces the time and effort required for maintenance, ultimately leading to more efficient and cost-effective development.

Collaboration: In most software development projects, multiple developers work together on the same codebase. Readable code allows team members to understand each other's work more effectively, enabling them to collaborate more efficiently and produce higher-quality software. Poorly written code can lead to confusion, misunderstandings, and increased development time.

Onboarding: When new developers join a team, readable code helps them get up to speed more quickly. If the code is easy to understand, they can familiarize themselves with the project and start contributing sooner, improving the overall productivity of the team.

Debugging: Readable code makes it easier to identify and fix bugs. When the code is well-structured and easy to follow, developers can more easily trace the source of a problem and apply the necessary fixes. This results in more stable and reliable software.

Scalability: As a project grows in size and complexity, readable code becomes even more critical. Well-organized code is easier to refactor and optimize, making it more adaptable to changing requirements and facilitating the addition of new features.

Code Reviews: Readable code simplifies the code review process. When code is easy to understand, reviewers can more effectively evaluate its quality, identify potential issues, and suggest improvements. This leads to higher-quality code and a more robust final product.

Future-proofing: Clear, well-documented code is more likely to be reusable and extendable in the future. As technology evolves and new requirements emerge, readable code can be more easily adapted to meet these needs, providing long-term value to the project and organization.

93. How do you write a docstring for a Python function or class?

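To write a docstring for a Python function or class, place a descriptive string literal, conventionally enclosed in triple double quotes, as the first statement in the body, immediately after the def or class line. Python stores this string in the object's __doc__ attribute, and tools such as help() display it.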
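As a minimal sketch of what this looks like in practice, the function and class below are hypothetical examples (the names rectangle_area and Stack are not from any library). The Args/Returns layout is one common convention, not a language requirement:

```python
def rectangle_area(width: float, height: float) -> float:
    """Return the area of a rectangle.

    Args:
        width: Length of the horizontal side.
        height: Length of the vertical side.

    Returns:
        The product of width and height.
    """
    return width * height


class Stack:
    """A minimal last-in, first-out stack backed by a list."""

    def __init__(self):
        """Create an empty stack."""
        self._items = []

    def push(self, item):
        """Add item to the top of the stack."""
        self._items.append(item)

    def pop(self):
        """Remove and return the top item; raise IndexError if empty."""
        return self._items.pop()


# The docstring is stored on the object itself and is what help() displays.
print(rectangle_area.__doc__.splitlines()[0])
```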

94. Describe the role of comments in Python code and best practices for writing them.

The role of comments in Python code is to provide additional context and explanation about the code's purpose, behavior, or specific implementation details. Best practices for writing comments include:

- 1. Write clear and concise comments that add value to the code.

- 2. Focus on explaining why something is done, not just what is being done.

- 3. Keep comments up-to-date as the code evolves.

- 4. Avoid redundant comments that merely restate what the code does.

- 5. Use comments to clarify complex or non-obvious code sections.

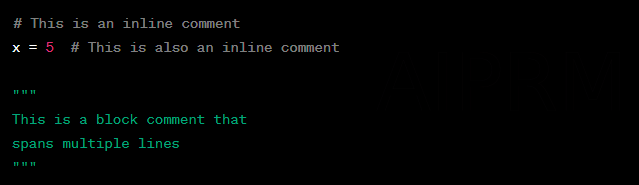

95. What is the difference between inline and block comments in Python?

Inline comments are placed on the same line as the code they explain, while block comments are written on separate lines and usually span multiple lines. Inline comments should be used for short explanations, while block comments are more suitable for longer descriptions or when explaining complex logic.

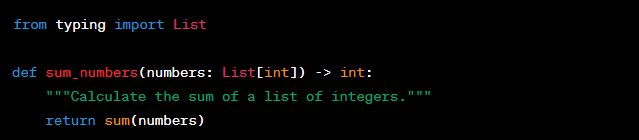
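To make the distinction concrete, here is a small sketch with one block comment and one inline comment. The function average_order_value and the shape of its input (dictionaries with "total" and "refunded" keys) are assumptions made up for this illustration:

```python
def average_order_value(orders):
    # Block comment: it sits on its own lines, at the same indentation as
    # the code it describes. Here it records why we filter: refunded
    # orders would otherwise distort the average.
    completed = [order for order in orders if not order["refunded"]]

    if not completed:
        return 0.0  # Inline comment: avoids dividing by zero below.

    return sum(order["total"] for order in completed) / len(completed)
```

PEP 8 suggests separating an inline comment from the statement by at least two spaces and using inline comments sparingly.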

96. How do you use type hints and annotations to improve code readability in Python?

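Type hints annotate function parameters and return values with the types they are expected to have, using Python's built-in annotation syntax and, for more complex types, helpers from the typing module. They improve code readability by making the expected input and output types explicit. Python does not enforce them at runtime, but static type checkers such as mypy can verify them.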
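A minimal sketch of annotated functions follows. The function names are hypothetical, and the built-in generic syntax (dict[str, str], list[str]) assumes Python 3.9 or newer; on older versions the equivalents typing.Dict and typing.List would be needed:

```python
from typing import Optional


def find_user_email(users: dict[str, str], username: str) -> Optional[str]:
    """Return the email for username, or None if the user is unknown."""
    return users.get(username)


def total_length(words: list[str]) -> int:
    """Return the combined length of all words."""
    return sum(len(word) for word in words)
```

A reader can tell from the signatures alone that find_user_email may return None, which is exactly the kind of expectation that would otherwise need a comment.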

97. Explain the concept of "self-documenting code" and how it can be achieved in Python.

Self-documenting code is code that is easy to understand without requiring extensive comments or documentation. It can be achieved in Python by:

- 1. Using descriptive variable, function, and class names.

- 2. Following established coding conventions and style guides, such as PEP 8.

- 3. Writing short, focused functions that do one thing well.

- 4. Using docstrings and type annotations to provide additional context where necessary.

- 5. Organizing code into logical modules and packages.

- 6. Keeping code simple and avoiding overuse of complex language features.

98. What are some tools that can be used to generate documentation for Python code, such as Sphinx or pdoc?

- 1. Sphinx: A powerful and flexible documentation generator that can produce HTML, PDF, ePub, and other formats. It uses reStructuredText as its markup language and supports cross-referencing, indexing, and more.

- 2. pdoc: A lightweight documentation generator that automatically generates API documentation from your docstrings. It supports both plain text and Markdown in docstrings and produces HTML output.

- 3. Doxygen: A documentation generator for multiple programming languages, including Python. It supports a wide range of output formats and can extract documentation from both comments and docstrings.

99. How do you refactor Python code to improve readability and maintainability?

To refactor Python code to improve readability and maintainability, you can:

- 1. Simplify complex or nested expressions by breaking them down into smaller, more readable pieces.

- 2. Replace magic numbers and hardcoded values with named constants.

- 3. Use more descriptive variable, function, and class names.

- 4. Organize code into logical modules and packages.

- 5. Remove redundant or dead code.

- 6. Consolidate duplicate code into reusable functions or classes.

- 7. Apply design patterns where appropriate to simplify and clarify the code structure.

- 8. Make use of Python's built-in functions and standard library modules to avoid reinventing the wheel.
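A small before-and-after sketch can illustrate several of these points at once (breaking up a dense expression, named constants, descriptive names). The pricing rule and all names here are invented for the example:

```python
# Before: magic numbers, a dense expression, and vague names hide the intent.
def calc(p, q):
    return p * q * 1.2 if q < 10 else p * q * 1.2 * 0.9


# After: named constants and descriptive names (all names are illustrative).
VAT_MULTIPLIER = 1.2
BULK_DISCOUNT_MULTIPLIER = 0.9
BULK_QUANTITY_THRESHOLD = 10


def order_total(unit_price: float, quantity: int) -> float:
    """Return the price including VAT, with a discount for bulk orders."""
    total = unit_price * quantity * VAT_MULTIPLIER
    if quantity >= BULK_QUANTITY_THRESHOLD:
        total *= BULK_DISCOUNT_MULTIPLIER
    return total
```

Both functions compute the same result; the refactoring changes only how easily a reader can see what the numbers mean.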

100. Describe the role of code reviews in ensuring code readability and quality.

Code reviews play a crucial role in ensuring code readability and quality by allowing team members to evaluate each other's code before it is integrated into the main codebase.

This process promotes knowledge sharing, collaboration, and learning, as well as the identification and correction of bugs, performance issues, and maintainability problems.

Additionally, code reviews help to enforce coding standards and best practices, ensuring that the code is consistent, readable, and efficient across the entire project.

Problem-solving skills

101. How do you approach debugging a complex issue in your Python code?

To approach debugging a complex issue in Python code, I follow these steps:

- 1. Reproduce the issue: First, I try to consistently reproduce the error to understand its context and determine its scope.

- 2. Understand the problem: I then analyze the error message, traceback, or any other relevant information to get a clear understanding of the problem.

- 3. Isolate the issue: I attempt to narrow down the problematic code section by using debugging techniques, such as inserting print statements, using logging, or utilizing a debugger like pdb or an IDE debugger.

- 4. Formulate a hypothesis: Based on my observations, I develop a hypothesis about the root cause of the issue.

- 5. Test the hypothesis: I modify the code to address the suspected issue and test it to see if the problem is resolved.

- 6. Iterate: If the problem persists, I refine my hypothesis and repeat the process until the issue is resolved.

- 7. Verify: Once the issue is fixed, I perform additional tests to ensure the solution is robust and does not introduce new issues.
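The isolation and verification steps above can be sketched with the standard logging module. The function parse_prices is a hypothetical example; the commented-out breakpoint() call shows where one might drop into pdb instead:

```python
import logging

logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(message)s")
logger = logging.getLogger(__name__)


def parse_prices(raw_values):
    """Convert strings to floats, skipping blank entries (hypothetical)."""
    prices = []
    for index, raw in enumerate(raw_values):
        # Log each input so a failing value can be traced to its position.
        logger.debug("index=%d raw=%r", index, raw)
        if not raw.strip():
            continue
        # To inspect state interactively, uncomment the next line (pdb):
        # breakpoint()
        prices.append(float(raw))
    return prices


# Verify step: pin the fixed behaviour down with an assertion or unit test.
assert parse_prices(["1.5", " ", "2"]) == [1.5, 2.0]
```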

102. Describe a challenging problem you encountered in a past project and how you solved it using Python.

In a past project, I faced a challenge when working with a large dataset that needed to be processed in real time for a data analytics application. Due to the volume of data and the processing time, the application's performance was severely impacted, resulting in slow response times.

To resolve this issue, I first analyzed the code to identify bottlenecks and found that the algorithm used for processing the data was inefficient. I then researched and implemented a more efficient algorithm, which significantly improved the processing time.

Additionally, I used parallel processing techniques, such as Python's multiprocessing module, to further speed up the data processing. I also implemented a caching mechanism to store intermediate results, reducing redundant computations. As a result, the application's performance improved significantly, providing faster response times and a better user experience.

103. How do you break down a large problem into smaller, more manageable components in Python?

Breaking down a large problem into smaller, more manageable components involves the following steps:

- 1. Understand the problem: Clearly define the problem statement and identify the desired outcome.

- 2. Identify components: Break the problem down into smaller sub-problems or components that can be addressed independently.

- 3. Create a modular design: Design the solution with a modular approach, using functions, classes, or modules to encapsulate the logic for each sub-problem.

- 4. Develop incrementally: Tackle each sub-problem one by one, implementing, testing, and refining the code as needed.

- 5. Integrate: Combine the individual components to form the overall solution, ensuring that they work together seamlessly.

- 6. Test and iterate: Test the complete solution, identify any issues or inefficiencies, and refine the code as needed.
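As an illustration of the modular approach, the sketch below splits a made-up task ("report the most frequent words in a text") into three small functions and one function that integrates them. All function names are assumptions for this example, and the list[str] annotations assume Python 3.9 or newer:

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Split text into lowercase words, dropping surrounding punctuation."""
    words = (word.strip(".,!?;:\"'()") for word in text.lower().split())
    return [word for word in words if word]


def count_words(words: list[str]) -> Counter:
    """Count how often each word occurs."""
    return Counter(words)


def format_report(counts: Counter, top_n: int = 3) -> str:
    """Render the top_n most common words, one per line."""
    return "\n".join(f"{word}: {n}" for word, n in counts.most_common(top_n))


def word_report(text: str, top_n: int = 3) -> str:
    """Integrate the components into the overall solution."""
    return format_report(count_words(tokenize(text)), top_n)
```

Each piece can be implemented and tested on its own before word_report combines them.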

104. How do you choose the right data structures and algorithms for solving a particular problem in Python?

Choosing the right data structures and algorithms involves:

- 1. Understand the problem: Analyze the problem requirements, constraints, and desired outcomes.

- 2. Evaluate data structures: Consider the data structures that best fit the problem's needs in terms of organization, storage, and retrieval of data, weighing factors such as time complexity, space complexity, and ease of use.

- 3. Evaluate algorithms: Review possible algorithms that can be used to solve the problem, taking into account their efficiency, complexity, and suitability for the specific problem.

- 4. Weigh trade-offs: Compare the different data structures and algorithms in terms of performance, memory usage, and code maintainability.

- 5. Implement and test: Implement the chosen data structures and algorithms, and test their effectiveness in solving the problem.

- 6. Iterate: If the chosen data structures or algorithms are not optimal, refine your choices and repeat the process until the best solution is found.
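One way to ground such a decision is to measure it. The sketch below compares membership tests on a list (a linear scan) with a set (a hash lookup, constant time on average) using the standard timeit module. The collection size and repetition count are arbitrary choices, and the exact timings will vary by machine:

```python
import timeit

ids_list = list(range(100_000))
ids_set = set(ids_list)
target = 99_999  # worst case for the list: the last element

# Time 200 membership tests against each structure.
list_time = timeit.timeit(lambda: target in ids_list, number=200)
set_time = timeit.timeit(lambda: target in ids_set, number=200)

print(f"list: {list_time:.4f}s  set: {set_time:.4f}s")
```

The trade-off is that a set uses more memory and does not preserve duplicates or ordering, so it fits lookups better than ordered sequences.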

105. Explain how you would optimize a slow Python function to improve its performance.

To optimize a slow Python function, I would follow these steps:

- 1. Profile: Use profiling tools, such as cProfile or py-spy, to identify the bottlenecks in the function.

- 2. Algorithm optimization: Review the algorithm used in the function and determine if a more efficient algorithm could be implemented.

- 3. Data structures: Evaluate the data structures used in the function and consider more efficient alternatives, if applicable.

- 4. Use built-in functions: Replace custom code with built-in Python functions or libraries that are optimized for performance.

- 5. Vectorization: If applicable, use vectorized operations with libraries like NumPy or pandas to speed up computations.

- 6. Caching: Implement caching techniques, such as using the functools.lru_cache decorator, to store the results of expensive computations and avoid redundant calculations.

- 7. Parallelism and concurrency: If the function can be parallelized, consider using Python's multiprocessing, concurrent.futures, or asyncio modules to improve performance.

- 8. Code refactoring: Refactor the code to improve its efficiency, readability, and maintainability.

- 9. Test and iterate: Test the optimized function to ensure it meets the desired performance improvements without introducing new issues.
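The profiling and caching steps can be sketched with the standard library alone. The naive Fibonacci function is a stand-in chosen for this example because its redundant recursive calls show up clearly in a profile:

```python
import cProfile
import pstats
from functools import lru_cache


def fib_slow(n: int) -> int:
    """Naive recursion: recomputes the same subproblems many times."""
    return n if n < 2 else fib_slow(n - 1) + fib_slow(n - 2)


@lru_cache(maxsize=None)
def fib_cached(n: int) -> int:
    """Same algorithm, but each result is computed once and then cached."""
    return n if n < 2 else fib_cached(n - 1) + fib_cached(n - 2)


# Step 1: profile the slow version to see where the time goes.
profiler = cProfile.Profile()
profiler.enable()
fib_slow(20)
profiler.disable()
pstats.Stats(profiler).sort_stats("cumulative").print_stats(5)

# Final step: confirm the optimized version still returns the same result.
assert fib_cached(20) == fib_slow(20)
```

The profile reports thousands of calls to fib_slow for a single top-level call, which is the signal that caching (or a different algorithm) is worth trying.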

106. How do you handle edge cases and corner cases when designing a solution in Python?

Handling edge cases and corner cases involves the following steps:

- 1. Identify potential edge and corner cases: Analyze the problem and think about possible edge and corner cases that may arise, such as unusual inputs, boundary conditions, or unexpected scenarios.

- 2. Design robust solutions: When designing the solution, consider how it will handle these edge and corner cases without causing unexpected behavior or errors.

- 3. Defensive programming: Employ defensive programming techniques, such as input validation, error handling, and proper exception handling, to ensure the solution is robust and can handle unexpected situations gracefully.

- 4. Test edge and corner cases: Write test cases to specifically target edge and corner cases, ensuring that the solution handles them correctly.

- 5. Document edge and corner cases: Document the edge and corner cases considered and how the solution handles them, to provide clarity for future maintenance or development.
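A brief sketch of these ideas applied to a hypothetical safe_average function follows. Returning None for an empty input and raising TypeError for non-numeric values are design choices made for this example, not the only reasonable ones:

```python
def safe_average(values):
    """Return the mean of values.

    Edge cases handled:
        - Empty input returns None instead of dividing by zero.
        - None or non-numeric items raise TypeError with a clear message.
    """
    if values is None:
        raise TypeError("values must be an iterable of numbers, not None")

    numbers = list(values)
    if not numbers:  # edge case: empty input
        return None

    for number in numbers:  # input validation
        # bool is a subclass of int, so it is rejected explicitly.
        if isinstance(number, bool) or not isinstance(number, (int, float)):
            raise TypeError(f"unsupported value: {number!r}")

    return sum(numbers) / len(numbers)
```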

107. What are some strategies for testing the correctness and efficiency of your Python code?

Some strategies for testing the correctness and efficiency of Python code include:

- 1. Unit testing: Write unit tests for individual functions or methods to ensure they produce the expected output for given inputs.

- 2. Integration testing: Test how different components of the code work together, ensuring that the overall solution functions correctly.

- 3. Regression testing: Test the code after changes are made to ensure that no new issues have been introduced.

- 4. Performance testing: Use profiling tools and benchmarking to measure the efficiency and performance of the code, identifying potential bottlenecks or areas for optimization.

- 5. Test-driven development (TDD): Write tests before implementing the code, ensuring that the code meets the desired requirements and is tested thoroughly.

- 6. Code coverage: Use code coverage tools, such as coverage.py, to measure the extent to which your tests cover your codebase, ensuring that all critical parts of the code are tested.

- 7. Stress testing: Test the code under high load or extreme conditions to evaluate its performance and robustness.

- 8. Automated testing: Implement automated testing using tools like pytest or unittest to run tests regularly and ensure code quality and correctness.

- 9. Code reviews: Conduct code reviews with peers to identify potential issues, improve code quality, and share knowledge.

- 10. Continuous integration and continuous deployment (CI/CD): Use CI/CD pipelines to automate the testing, building, and deployment of your code, ensuring that any issues are detected and addressed as early as possible.
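As a minimal sketch of unit testing with the standard unittest module, the example below tests a hypothetical is_palindrome function, including an edge case. The suite is run programmatically here so the snippet works when pasted into a script or an interactive session; in a project, one would more commonly run it with python -m unittest or pytest:

```python
import unittest


def is_palindrome(text: str) -> bool:
    """Return True if text reads the same both ways, ignoring case and
    non-alphanumeric characters."""
    cleaned = [ch.lower() for ch in text if ch.isalnum()]
    return cleaned == cleaned[::-1]


class IsPalindromeTests(unittest.TestCase):
    def test_simple_palindrome(self):
        self.assertTrue(is_palindrome("Level"))

    def test_ignores_punctuation_and_spaces(self):
        self.assertTrue(is_palindrome("A man, a plan, a canal: Panama"))

    def test_non_palindrome(self):
        self.assertFalse(is_palindrome("Python"))

    def test_empty_string_edge_case(self):
        self.assertTrue(is_palindrome(""))


# Load and run the test case without exiting the interpreter.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(IsPalindromeTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```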

108. Describe how you stay up-to-date with the latest Python features, libraries, and best practices.

To stay up-to-date with the latest Python features, libraries, and best practices, I employ the following strategies:

- 1. Follow Python's official website, blog, and mailing lists to keep track of new releases, updates, and features.

- 2. Read Python-related blogs, articles, and books to learn about new techniques, libraries, and best practices.